Semi-Annual Usability Action Report

Submitted by: CWTSatoTravel ··· January - June 2019 ··· Edition 5.1

Revision History

| Contributor/Author | Edition | Date | Comments |
|---|---|---|---|
| John Utter, Laura Kistler | 1.0 | 07 December 2015 | Initial |
| John Utter, Laura Kistler | 1.1 | 26 January 2016 | Revisions based on PMO feedback |
| John Utter, Laura Kistler | 2.0 | 01 July 2016 | Updated |
| John Utter, Laura Kistler | 2.1 | 02 August 2016 | Revisions based on PMO feedback |
| John Utter, Laura Kistler | 2.2 | 09 January 2017 | Updated |
| John Utter, Laura Kistler | 3.1 | 03 July 2017 | Updated |
| John Utter, Laura Kistler | 3.2 | 15 January 2018 | Updated |
| John Utter, Laura Kistler | 4.1 | 15 July 2018 | Updated |
| John Utter, Laura Kistler | 4.2 | 15 January 2019 | Updated |
| John Utter, Laura Kistler | 5.1 | 02 August 2019 | Updated |

Purpose and Overview

The following describes the CW Government Travel, Inc. (d.b.a. CWTSatoTravel) Semi-Annual Usability Action Report (SAUAR) for ETS2 E2 Solutions. This document is required by section D.40.hh of the GSA ETS2 Master Contract GS-33-FAA009.

Twice per year, CWTSatoTravel delivers a SAUAR to the PMO within 30 days prior to the Semi-Annual Performance Review. The SAUAR reports on the usability and user experience of the ETS2 E2 Solutions product, details usability assurance activities during the previous six months, and communicates the status and progress of the usability program to ETS2 E2 Solutions system stakeholders. Each SAUAR describes any changes to key personnel in our cross-functional usability team since the previous SAUAR, summarizes any completed system changes or enhancements intended to improve usability, and documents findings from any usability or accessibility (e.g., Section 508) audits performed during the period of review. Recurring usability indicators (measures of efficiency, effectiveness, web performance, and customer satisfaction) are detailed, along with the major findings from ongoing usability evaluation activities. Known plans to correct or mitigate usability and accessibility issues are documented in the SAUAR in the context of an updated ETS2 E2 Solutions product roadmap.

As specified in D.40.hh, the SAUAR reports on CWTSatoTravel’s disciplined and iterative approach to User-Centered Design (UCD) and Usability Engineering (UE), including but not limited to efforts to complete user-centered design and usability engineering analyses, evaluations/tests, corrective actions to mitigate identified usability problems, and/or upgrades. The Semi-Annual Usability Action Report shall provide updates on the following:

- Changes in key Contractor ETS2 UCD/UE team personnel;

- Overview of any user interface design changes or enhancements;

- Documentation of the findings from any Accessibility Audits or other Section 508 compliance activities, including any corrective actions planned or completed;

- Updates to any recurring usability indicators being tracked on a semi-annual basis in keeping with the Contractor’s User-Centered Design/Usability Assurance Plan, such as:

  - Efficiency indicators, such as:

    - Time taken by users to complete ETS2 tasks (such as those specified in ETS2 Use Cases)

    - Time taken by users to complete end-to-end ETS2 transactional processes

    - Time taken by users to access online help information

  - Effectiveness indicators, such as:

    - User rates of completing ETS2 tasks

    - User rates of abandoning tasks

    - Various errors or types of errors

  - Satisfaction indicators, such as:

    - Analysis of comments and questionnaire data from Usability Tests

    - Customer satisfaction survey data

    - User focus group and/or interview data

- Documentation of the findings from any usability evaluation activities undertaken in the preceding six months, such as:

  - Usability audits based on inspection by qualified usability professionals

  - Task-based usability testing with representative users attempting typical ETS2 tasks

  - Observations of users in their work environment

  - User customer satisfaction surveys

  - Analysis and reviews of click-stream web logs, including error conditions, online help access incidents, and task times and completion/abandonment data

  - Analysis and reviews of Help Desk incidents logged as usability issues

  - Feedback gleaned from users during ETS2 training

- Contractor progress in mitigating identified usability issues in relation to the Contractor’s software development life cycle, release schedule, and Technology Refresh plans.

Executive Summary

CWTSatoTravel is committed to incorporating best practices in usability improvement, user-centered design, and usability engineering into the ETS2 E2 Solutions software development lifecycle and service delivery. These practices are part of our usability-centered continuous improvement program, involving iterative and ongoing usability improvement activities. Our goals are measurable continual improvements to the efficiency, effectiveness, and satisfaction levels of the ETS2 E2 Solutions product and service. The period of performance (POP) of this SAUAR is January - June 2019. During this POP, the E2 Solutions team continued to focus resources on agency implementations, development of new features and functionality, and expansion of usability assurance activities. Key points within these areas:

- There have been no changes to key UCD/UE personnel;

- Several changes have been implemented to improve usability within E2 Solutions and the online booking engine (GetThere);

- The restructured E2 Solutions User Interface continues to deliver significantly more work completed per user session, in significantly less time;

- CWTSatoTravel continued to collaborate, applying data and guidance learned from the GSA’s ETSNext initiative;

- E2 Solutions continues development and testing to meet WCAG 2.0 AA success criteria under the Revised Section 508 Standards;

- Migration of web analytics from Adobe SiteCatalyst to Google Analytics is complete;

- Information architecture evaluations and prototype redevelopment of Mobile Shop First and User Profile are underway;

- E2 Solutions is seeing significant growth in user visits on a mobile or tablet device, now more than 8% of monthly users;

- UAM was surprised that observed gains in user efficiency metrics for Travelers (reductions in error rates and time taken to complete critical tasks) had not translated as expected into an increased Customer Satisfaction Survey (CSS) score, as they had for Arrangers and Approvers;

- CWTSatoTravel continues to be concerned that the majority of CSS respondents come from one or two agencies and one user role;

- E2 Solutions continues to perform within the UAM goal of page loads under 2 seconds, with an overall average page load time of 1.79 seconds;

- Users at the locations below appear to be connected through especially restrictive or slow connections, likely behind overloaded network hardware, proxy or software policies, or other localized internet traffic bottlenecks. This information will be provided to agency POCs to help those offices focus IT infrastructure investment.

  - DOC offices in Seattle, WA and Missoula, MT

  - DOS offices in Stuttgart, Germany and Atlanta, GA

  - EEOC offices in Kansas City, MO and Washington, DC

  - DOT offices in Phoenix, AZ

  - SSA offices in Woodlawn, MD

Contractor ETS2 UCD/UE Team Key Personnel Updates

Key Personnel

During this reporting period, there were no changes to the E2 Solutions UCD/UE team key personnel identified in the ETS2 Master Contract:

- John Utter, Usability Assurance Manager (UAM)

- Ann Reubish, E2 Solutions Product Manager

- Laura Kistler, Director, Products & Services Marketing

UCD/UE Cross-functional Team

The UCD/UE team consists of a cross-section of E2 Solutions team members from Quality Assurance, Product Support, Development, and Business Analysis. The UCD/UE team continues to work with our third-party vendors, GetThere, our online booking tool provider, and Oracle Web Services (OVsC), our help desk issue tracking tool, to ensure usability issues with their products are documented and addressed.

Participation in usability efforts extends beyond the UCD/UE team. Program Managers, Trainers, Help Desk Analysts, Business Analysts, Developers, Quality Assurance Analysts, and Product Owners support usability improvements in their departments. CWTSatoTravel has specific teams within these groups focused on usability efforts, as does GetThere. Our UAM “runs point” and reports at the director level. The UAM and directors work across teams to drive usability and accessibility improvements across product lines, teams, partners, and subcontractors.

Usability testing is proctored at agencies by the UAM, supported by other employees and partners. We endeavor to engage members of the CWTSatoTravel development and quality assurance teams in this activity to develop a closer connection between the development team and the user community. These cross-functional groups gain deep insight by speaking directly with users of the software they create. The UAM designs these sessions to be a high-growth opportunity and also welcomes the GSA’s participation.

- CY2016 — in-person testing was conducted by the UAM, Director, Trainers, Program Managers, and GetThere representative.

- CY2017 — the UAM, Director, Software Development Manager, Senior Business Analyst, and Senior Product Director executed in-person and remote UX evaluations during the POP. GetThere representatives also attended and executed UX evaluations.

- CY2018 — in-person and remote testing was conducted by the UAM, Director, Program Managers, Senior Business Analyst, QA Manager and GetThere; focused on users at major and minor agencies new to participating in evaluations.

- CY2019 — remote testing was conducted by the UAM and GetThere representatives. Further in-person testing is scheduled for Fall 2019.

Overview of Major User Interface Design Changes or Enhancements

During this period of performance, CWTSatoTravel supported multiple product releases, both for E2 Solutions and from our online booking solution provider, GetThere. A summary of these releases is shown in Table 1.

Table 1 - Product Release Summary

| Type | Number | Date |
|---|---|---|
| E2 Solutions | 18.4.01 | January 2019 |
| E2 Solutions | 19.1 | April 2019 |
| GetThere | 19.01 | January 2019 |
| GetThere | 19.02 | February 2019 |
| GetThere | 19.03 | April 2019 |
| GetThere | 19.04 | May 2019 |
| GetThere | 19.05 | June 2019 |

While these releases included many changes to the E2 Solutions software to support new functionality and defect resolution, several changes were introduced that are expected to improve the user experience and that target goals of the ETSNext initiative, including:

- Reduces time in the system

- Reduces task complexity

- Improves user confidence in the system

- Improves user communication and direction

- Encourages policy compliance

- Simplifies managing travel

Table 2 provides a list of notable items, along with their associated release and a general description of the expected impact. Details for each item are described in the remainder of this section.

Table 2 – List of Major Usability Improvements

| Item ID | Release | Summary | ETSNext Category | Impact |

|---|---|---|---|---|

| Multiple | Ongoing | Restructured E2 Solutions User Interface | Reduces task complexity Improves user confidence in the system |

Simplifies and modernizes TAVS user interface and page style, for page clarity and user focus. |

| Multiple | Ongoing | Updates to conform to Web Content Accessibility Guidelines (WCAG) 2.0 AA | Reduces time in the system Reduces task complexity Improves user confidence in the system |

Accessibility remediation progress to meet Section 508 Refresh success criteria and make the application more usable to all users of E2 Solutions. |

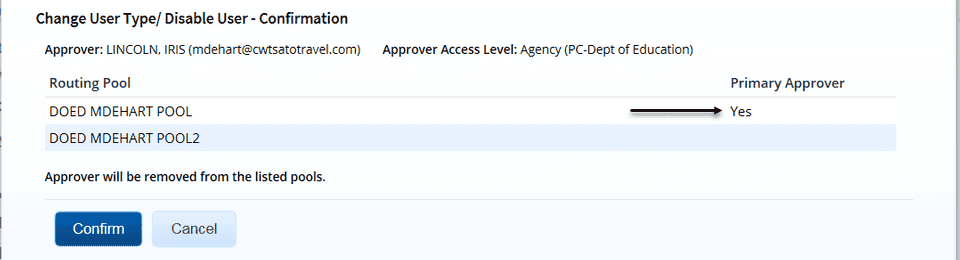

| US13218, US13221, US13222, US13529 | E2 Solutions 18.4.01 | Change/Disable Approver | Simplifies managing travel Reduces task complexity |

An administrator can now disable an approver or change the User Type field from Approver to Traveler or Auditor, even if the approver is a member of a routing pool. |

| TD60324/ US13774 | E2 Solutions 19.1 | Login Error Messaging | Improves user communication and direction | When a user entered an incorrect user name or password on the Login page, focus is shifted to the error message for screen readers following the error messaging pattern found within E2 Solutions. |

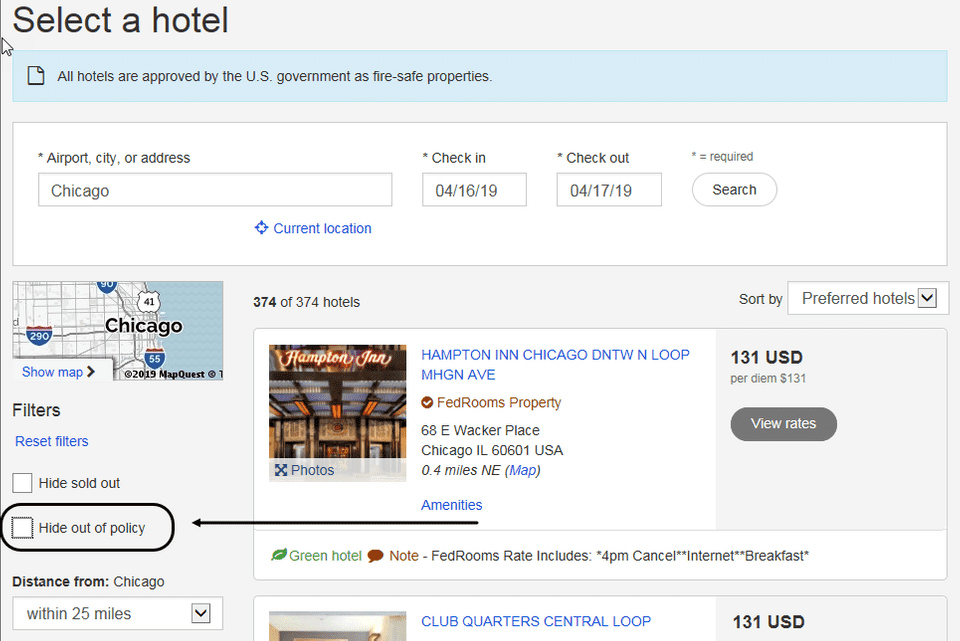

| N/A | GetThere 19.02 | Out of Policy Filter | Encourages policy compliance Reduces task complexity |

A Hide out of policy check box was added to the Filters section on the Select a Hotel page. |

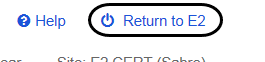

| N/A | GetThere 19.02 | Renaming “Purchase Trip” and “Log out” | Improves user communication and direction Improves user confidence in the system |

In an attempt to increase clarity for all users of the online booking tool, the Purchase Trip and Log out buttons in GetThere were renamed to Complete Reservation and Return to E2, respectively. |

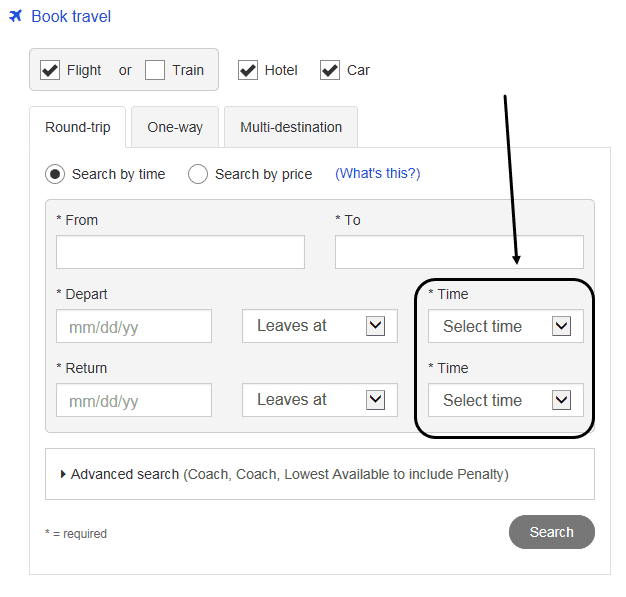

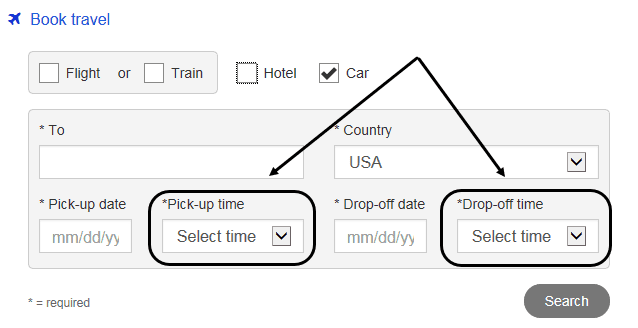

| N/A | GetThere 19.03 | Required Travel Time/No Default Time | Improves user communication and direction Improves user confidence in the system |

Site administrators can now require travelers to select specific times when establishing their search criteria on the OBT home page. |

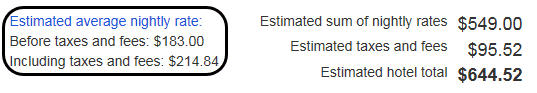

| N/A | GetThere 19.04 | Average Nightly Rate | Improves user communication and direction | Trip Review and Checkout page will now display the average nightly rate before taxes, as well as the rate with taxes included. |

Restructured E2 Solutions User Interface

As documented in detail in the Release Notes and previous SAUARs, the E2 Solutions team is continuing its effort to modernize the E2 Solutions user interface. The changes focus on how information is presented while preserving the baseline content and workflow. Our goals for the user interface include:

- Reduce screen “noise” and declutter the workflow.

- Integrate the travel and expense application and the online booking tool more cohesively.

- Meet Revised Section 508 Refresh accessibility standards in effect January 18, 2018 for all new functionality.

The updated UI was first made available by feature flag in March 2018, so agency customers could turn on the new look when they were ready. All agencies were migrated automatically in the fall of 2018. The investments in a clear and consistent UI and fast application response appear to yield gains in user work completed, in significantly less time. Measurable task results can be found under Efficiency.

Revised Section 508 — Web Content Accessibility Guidelines (WCAG) 2.0 AA

E2 Solutions continues to make updates to conform to Web Content Accessibility Guidelines (WCAG) 2.0 AA success criteria. These updates enhance accessibility to Information and Communication Technology (ICT) for people with disabilities and meet the Revised Section 508 standards (published 18 January 2017).

Change/Disable Approver

A system administrator with Update Routing Pools permission can now disable an approver or change the User Type field from Approver to Traveler or Auditor, even if the approver is a member of a routing pool. Previously, the administrator had to remove the approver from any pools before performing this action. This was one of the most requested features in customer user interviews.

Out of Policy Filter

A Hide out of policy check box was added to the Filters section on the Select a Hotel page to allow users to quickly see the available hotel options and reduce the overall booking time. Selecting this check box filters the results returned on the page to exclude those hotels flagged as “out of policy.”

The filter removes out-of-policy hotels from the hotel availability page. It will not, however, remove any out-of-policy rooms from the hotel details/rate page of an otherwise in-policy hotel. Out-of-policy rooms are still clearly identified to the user via icons and text.

Renaming “Purchase Trip” and “Log out” actions

In an attempt to clarify language for all users of the online booking tool, modifications were made to the Purchase Trip and Log out buttons in GetThere.

“Log out” in the header of every online booking tool page was renamed “Return to E2”. This change should indicate to the user that the online booking tool’s workflow will close and the user will return to E2.

The “Purchase Trip” button on the Trip Review and Checkout page was changed to “Complete Reservation”. This alteration should eliminate any confusion or anxiety that may have occurred because of the word purchase.

Required Travel Time/No Default Time

We found during user studies that many travelers ignored the default time selector, which affected the shopping results when searching by time. Site administrators can now require travelers to select specific times when establishing their search criteria on the OBT home page. The Time fields default to Select time, and an asterisk (*) displays next to the field label, indicating the field is required.

If the user neglects to select a time in one or both of the fields before clicking Search, the missed field is highlighted and the following error message displays at the top of the page: *You must select the time of your travel.* This ensures more relevant options are provided in the search results.

Average Nightly Rate

Prior to the 19.04 release, only a hotel’s average nightly rate after taxes was displayed in the Hotel Details section of the Trip Review and Checkout page. The Trip Review and Checkout page now displays the average nightly rate before taxes, as well as the rate with taxes included. This change should help users identify the average room rate, especially when rates change during their stay.

Upcoming Usability Improvement Activities

As of publication, upcoming usability improvement activities include:

TAVS

- Implement short-term usability improvements such as those identified in the ETSNext roadmap

- Continue collaborating on the GSA’s ETSNext Program recommendations

- Encourage the adoption of agency-configurable settings that may result in usability improvements

- Continue to expand metrics recording and segmentation within Google Analytics

- Develop incremental usability improvements resulting from usability indicators and user evaluations

- Perform CY2019 in-person user testing with agency end user pool

- Resolve remaining UI Refresh user stories

- Participate in and help guide the Technology Refresh

- Promote progressive development of the user interface and task flow

- Reduce the effort it takes to change reservations

OBE

- Continue to drive usability improvements to the GetThere online booking engine

- Improve hotel attachment rate

- Reduce the time and user effort it takes to book reservations

- Reduce the effort it takes to change reservations

CWTSato To Go

- Promote mobile app capabilities

Recurring Usability Indicators

Usability has an international standard definition in ISO 9241 pt. 11 (ISO, 1998), which defines usability as the “extent to which a product can be used by specified users to achieve specified goals with effectiveness, efficiency, and satisfaction in a specified context of use.” We further define software usability in terms of the following attributes:

1. Efficiency

- Time taken to complete critical tasks

- Time taken to complete end-to-end processes

- Time taken by users to access online help information for critical areas

2. Effectiveness

- User rates of completing critical tasks

- User rates of abandoning critical tasks

- Rates and types of errors

3. Satisfaction

- Analysis of comments and questionnaire data from usability tests

- Analysis of customer satisfaction survey data

- Analysis of user focus group and interview data

4. Performance

- Front-end website performance

- Browser incompatibilities and bottlenecks

- Page rendering and performance

- User’s perceived site performance

These attributes provide comprehensive measurable ways to assess the usability of the ETS2 E2 Solutions product. Usability tests contain some combination of completion rates, errors, task times, task-level satisfaction, test-level satisfaction, help access, and lists of usability problems including frequency and severity.

There are generally two types of usability tests:

- Formative tests: discovering and fixing usability problems. The bulk of E2 Solutions’ usability testing is formative. It is often a small-sample qualitative activity in which the data takes the form of problem descriptions that inform design recommendations and development priority. Users are given open-ended scenarios or tasks and resolve them in whatever way they prefer.

- Summative tests: measuring the usability of an application using metrics. Users are given detailed scenarios or tasks, and the usability of the application can be quantitatively measured by counting user errors or deviations from the optimal path.
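The summative measures described above (completion, errors, and deviation from the optimal path) reduce to simple arithmetic over per-task attempt records. The sketch below assumes a hypothetical `TaskAttempt` record that holds a proctor-recorded error count and step counts against a predefined optimal path. It illustrates the idea and is not the team's actual scoring tool. The sample attempts are invented.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskAttempt:
    """One participant's attempt at one scripted task (hypothetical record layout)."""
    completed: bool
    errors: int         # user errors observed by the proctor
    steps_taken: int    # pages/actions the participant actually used
    optimal_steps: int  # steps on the optimal path defined for the scenario


def summarize(attempts: list[TaskAttempt]) -> dict[str, float]:
    """Summative metrics for a single task across all participants."""
    if not attempts:
        raise ValueError("no attempts recorded")
    completion_rate = sum(a.completed for a in attempts) / len(attempts)
    mean_errors = mean(a.errors for a in attempts)
    # Deviation from the optimal path is only meaningful for completed attempts.
    deviations = [a.steps_taken - a.optimal_steps for a in attempts if a.completed]
    mean_deviation = mean(deviations) if deviations else float("nan")
    return {
        "completion_rate": completion_rate,
        "mean_errors": mean_errors,
        "mean_path_deviation": mean_deviation,
    }


# Hypothetical attempts by four participants at one task.
attempts = [
    TaskAttempt(True, 0, 6, 6),
    TaskAttempt(True, 2, 9, 6),
    TaskAttempt(False, 3, 12, 6),
    TaskAttempt(True, 1, 7, 6),
]
print(summarize(attempts))
```

In this sample, three of four participants complete the task, so the completion rate is 0.75. Because the same records also yield error and path-deviation figures, results from repeated studies can be compared on identical terms.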

CWTSatoTravel usability reporting metrics for the ETS2 E2 Solutions product draw on feedback sources such as the self-audit, UX evaluations, help desk logs, and customer discussions, including training sessions, focus groups, and user groups.

Efficiency

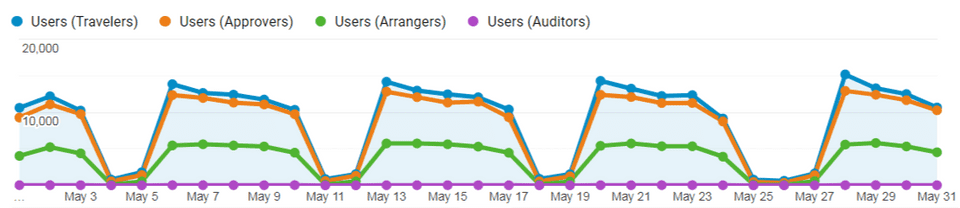

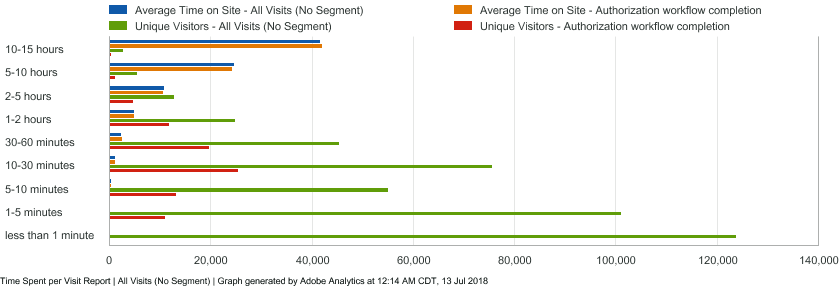

In order to accurately gauge system usability, our team measures and tracks usability indicators and benchmarks over time, and across different user roles. Web analytic tools capture meaningful information generated by production E2 Solutions usage at scale. In addition to efficiency metrics discussed elsewhere in this report, metrics of interest observed during the POP include:

- Across user roles, ~30,486 weekday unique users (5.3% increase since last SAUAR) visited up to ~31 pages per session (no change since last SAUAR). No significant application-wide UI refactoring was completed during the POP, so no change was expected.

- Travelers completed their tasks in an average of ~33 minutes (no change since last SAUAR). This figure reflects the user’s whole session. More specific end-to-end task workflow metrics, such as average task completion and fallout for authorizations, vouchers, and local vouchers, are included elsewhere within this report.

- Users checking a document status and then exiting accounted for about 24-30% of site traffic. We are exploring methods to better communicate document status to these users.

- Investments in clear and consistent UI and fast application response should continue to focus on providing gains in the rate of user-work completed per session.

May 2019 – Efficiency Metrics by Role

| Role | Unique Users | % of Unique Users | Sessions | % of Sessions | Sessions per User | Avg. Session Duration | Avg. Page Depth |
|---|---|---|---|---|---|---|---|
| Traveler | 114,333 | 30.68% | 393,382 | 36.7% | 3.44 | 00:33:22 | 17.25 |
| Arranger | 27,540 | 7.39% | 202,752 | 18.9% | 7.36 | 00:48:46 | 31.39 |
| Approver | 70,655 | 18.96% | 407,487 | 37.9% | 5.77 | 00:26:45 | 17.81 |
| Auditor | 122 | 0.03% | 890 | 0.1% | 7.30 | 01:38:47 | 43.77 |
| Admin | 219 | 0.06% | 460 | 0.1% | 2.10 | 00:54:44 | 33.76 |
| All Users | 372,719 | | 1,072,763 | | 2.88 | 00:33:12 | 13.97 |

June 2017 – legacy UI comparison

| Role | Unique Users | % of Unique Users | Sessions | % of Sessions | Avg. Session Duration | Avg. Page Depth |
|---|---|---|---|---|---|---|
| Traveler | 97,593 | 63.5% | 362,540 | 51.4% | 00:48:29 | 24.30 |
| Approver | 59,206 | 38.5% | 345,135 | 48.9% | 00:40:17 | 28.25 |
| Auditor | 112 | 0.1% | 1,784 | 0.3% | 01:15:35 | 52.14 |
| Admin | 165 | 0.1% | 958 | 0.1% | 00:40:46 | 47.65 |
| All Users | 153,712 | | 705,174 | | 00:44:32 | 26.39 |
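The derived columns in these tables can be reproduced from the raw counts. Sessions per user is sessions divided by unique users, and the change in average session duration can be computed role by role. The sketch below uses figures copied from the two tables. The helper names are hypothetical. It covers only roles present in both tables, because the June 2017 table has no Arranger row. A shorter session does not by itself prove the effect of the UI change, since other factors may also differ between the two periods.

```python
def to_seconds(hms: str) -> int:
    """Convert an 'HH:MM:SS' duration to whole seconds."""
    hours, minutes, seconds = (int(part) for part in hms.split(":"))
    return hours * 3600 + minutes * 60 + seconds


def sessions_per_user(unique_users: int, sessions: int) -> float:
    return sessions / unique_users


def duration_change(before: str, after: str) -> float:
    """Relative change in average session duration; negative means shorter sessions."""
    return (to_seconds(after) - to_seconds(before)) / to_seconds(before)


# (unique users, sessions, avg. session duration), copied from the tables above.
MAY_2019 = {
    "Traveler": (114_333, 393_382, "00:33:22"),
    "Approver": (70_655, 407_487, "00:26:45"),
    "All Users": (372_719, 1_072_763, "00:33:12"),
}
JUNE_2017 = {
    "Traveler": (97_593, 362_540, "00:48:29"),
    "Approver": (59_206, 345_135, "00:40:17"),
    "All Users": (153_712, 705_174, "00:44:32"),
}

for role, (users, sessions, duration) in MAY_2019.items():
    old_users, old_sessions, old_duration = JUNE_2017[role]
    print(f"{role}: sessions/user {sessions_per_user(old_users, old_sessions):.2f} -> "
          f"{sessions_per_user(users, sessions):.2f}; "
          f"avg. session duration {duration_change(old_duration, duration):+.1%}")
```

With these figures, the average Traveler session in May 2019 is about 31% shorter than in June 2017. The average session across all users is about 25% shorter.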

Tablet and Mobile Users

- 8.16% of monthly users visit from a mobile or tablet device.

  - The majority of those devices are tablets: Windows (54%) or iPad (6%).

  - The remaining 40% are phones, mostly iPhones (28%) and Samsung Android phones (7%).

- E2 users appear to welcome further UI refinement to benefit mobile users.

| Mobile Operating System | Users (month) | % of Mobile Users |
|---|---|---|
| Windows | 18,042 | 59.64% |
| iOS | 10,125 | 33.47% |
| Android | 2,042 | 6.75% |
| BlackBerry | 43 | 0.14% |
| Total | 30,425 | 8.16% of all users (372,719) |

Effectiveness

CWTSatoTravel and GetThere evaluate the effectiveness of the E2 Solutions application through User Experience Evaluations. Information and results from the evaluations conducted during this POP can be found under Usability Audits.

User fallout, exit pages and abandons are discussed in Completion Data, Task Conversion and Abandonment Data.

User Satisfaction — ETS2 Customer Satisfaction Survey Results

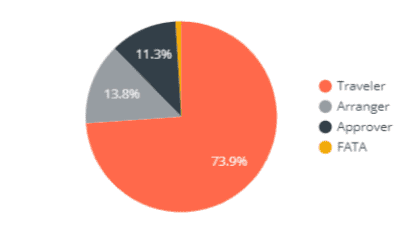

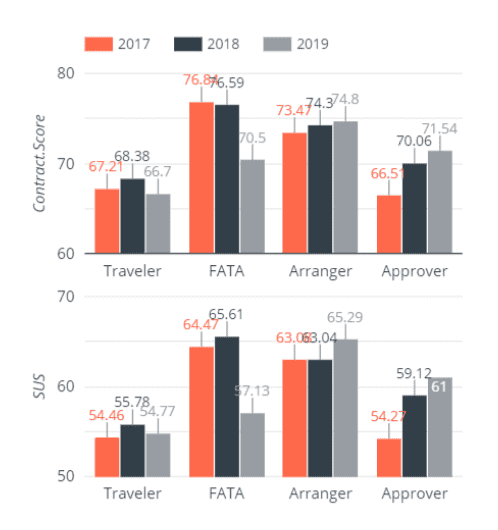

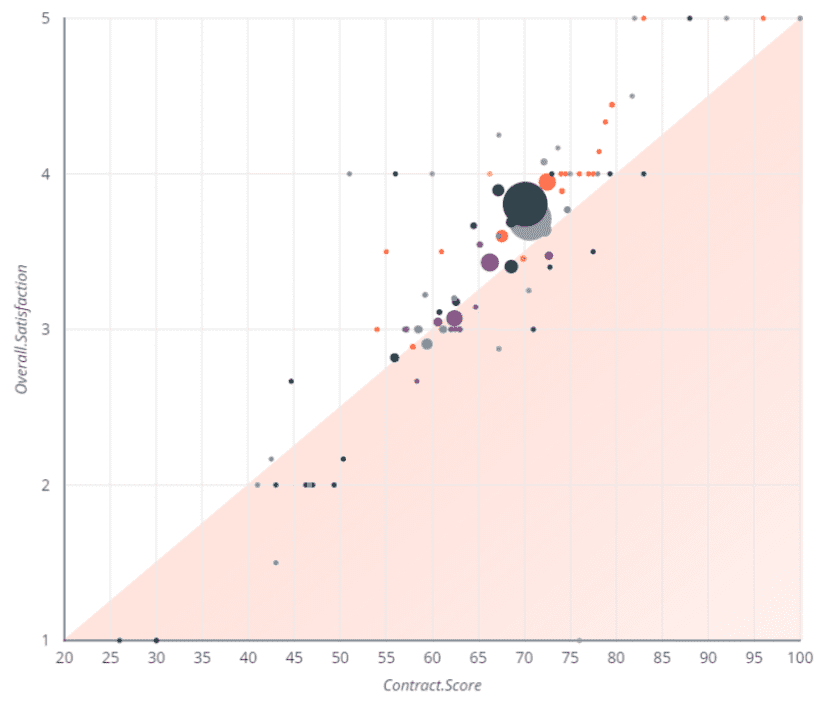

GSA conducted the ETS2 Customer Satisfaction Survey (CSS) using standardized SUS questions and new, unscored Fedrooms questions, receiving 2,008 responses. Full results were delivered during this POP and discussed with internal stakeholders. The E2 Solutions 2019 Contract Score of 68.40, down 0.98 points (about 1.4%) from 2018’s 69.38, is considered a neutral change.

- The decline resulted from lower Contract and SUS scores from one overrepresented user role, Travelers, who made up nearly 74% of CSS respondents. By actual application use, Travelers (36.7% of E2 session traffic) and Approvers (37.9%) are nearly equally the most active user roles by session on E2, followed by Arrangers (18.9%). UAM was surprised that observed gains in user efficiency metrics for Travelers (reductions in error rates and time taken to complete critical tasks) had not translated as expected into an increased CSS score.

- Arrangers (14% respondents, 18.9% E2 session traffic) and Approvers (11% respondents, 37.9% E2 session traffic) both reported increased Contract and SUS scores in 2019.
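One way to quantify the over-representation described above is a representation ratio: a role's share of CSS respondents divided by its share of E2 session traffic. The sketch below uses the percentages quoted above. The inverse of each ratio is shown as a hypothetical post-stratification weight. That weight is an assumption about a possible analysis, not GSA's scoring method.

```python
# Shares quoted in this section (2019 CSS respondents vs. E2 session traffic).
RESPONDENT_SHARE = {"Traveler": 0.74, "Arranger": 0.14, "Approver": 0.11}
SESSION_SHARE = {"Traveler": 0.367, "Arranger": 0.189, "Approver": 0.379}


def representation_ratio(role: str) -> float:
    """Respondent share / traffic share; above 1 means over-represented in the survey."""
    return RESPONDENT_SHARE[role] / SESSION_SHARE[role]


def reweighting_factor(role: str) -> float:
    """Hypothetical post-stratification weight that would align respondents with traffic."""
    return 1.0 / representation_ratio(role)


for role in RESPONDENT_SHARE:
    print(f"{role}: ratio {representation_ratio(role):.2f}, "
          f"weight {reweighting_factor(role):.2f}")
```

Travelers come out at roughly 2.0, meaning about twice their share of traffic. Arrangers come out at about 0.74 and Approvers at about 0.29, which reflects how heavily the respondent pool leans toward Travelers.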

By far, the lowest scoring CSS statement across agencies was “Q8. It is easy to make changes to an existing reservation.” This was also the lowest scoring CSS statement in each of the previous two years and indicates an area of continued need for improvement.

- Q6. I can book a Travel Reservation (e.g. Flights, Hotels, Rental Car) in a reasonable amount of time. 3.65

- Q7. It is easy to book Travel Reservations 3.47

- Q8. It is easy to make changes to an existing reservation 3.13

- Q9. Overall, I am very satisfied with the travel reservation process 3.41

- Q10. It is easy to initiate/create travel authorizations and vouchers 3.71

- Q11. I can complete my travel authorizations in a reasonable amount of time. 3.70

- Q12. I can complete my travel vouchers in a reasonable amount of time. 3.76

- Q13. The system’s routing process for authorization and voucher approval is acceptable. 3.61

- Q14. Overall, I am very satisfied with the travel authorization and vouchering process. 3.58

- Q25. Considering all of your experiences, how satisfied are you overall with E2 Solutions? 3.60

The two lowest scoring SUS statements indicate that users felt the system was unnecessarily complex and that they needed to learn a lot before feeling adept with it:

- I think that I would like to use this system frequently. 3.29

- I found the system unnecessarily complex. 3.04

- I thought the system was easy to use. 3.38

- I think that I would need the support of a technical person to be able to use this system. 3.48

- I found the various functions in this system were well integrated. 3.34

- I thought there was too much inconsistency in this system. 3.34

- I would imagine that most people would learn to use this system very quickly. 3.19

- I found the system very cumbersome to use. 3.17

- I felt very confident using the system. 3.55

- I needed to learn a lot of things before I could get going with this system. 2.99
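SUS is scored on a 0 to 100 scale. For each respondent, odd-numbered (positively worded) items contribute the answer minus 1, and even-numbered (negatively worded) items contribute 5 minus the answer. The sum is then multiplied by 2.5. The item averages listed above appear to be already oriented so that higher is more favorable, since the two negatively worded statements are called out as the lowest scoring. Under that assumption, the score is 2.5 times the sum of (mean − 1). The sketch below implements both forms. The function names are illustrative.

```python
def sus_from_responses(responses: list[int]) -> float:
    """Standard SUS score (0-100) for one respondent's ten raw 1-5 answers."""
    if len(responses) != 10 or not all(1 <= r <= 5 for r in responses):
        raise ValueError("expected ten answers on a 1-5 scale")
    total = 0
    for index, answer in enumerate(responses):
        # index is 0-based, so an even index is an odd-numbered (positive) item.
        total += answer - 1 if index % 2 == 0 else 5 - answer
    return total * 2.5


def sus_from_normalized_means(item_means: list[float]) -> float:
    """SUS from per-item means already oriented so that 5 is most favorable."""
    if len(item_means) != 10:
        raise ValueError("expected ten item means")
    return sum(m - 1 for m in item_means) * 2.5


# 2019 CSS item means, in standard SUS order as listed above (assumed normalized).
css_2019 = [3.29, 3.04, 3.38, 3.48, 3.34, 3.34, 3.19, 3.17, 3.55, 2.99]
print(f"{sus_from_normalized_means(css_2019):.1f}")
```

Applied to the ten 2019 item means, this gives about 56.9. That is consistent with the 57.0 recorded for the Apr-19 GSA Survey, given that the item means are rounded. Because the scoring is linear, averaging per-respondent SUS scores gives the same result as scoring the per-item averages, provided every respondent answers all ten items.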

- Our UCD/UE team, development, business stakeholders, and partners read and categorized every open-ended user comment to weight and guide usability improvement efforts.

  - Many of the negative comments received on the survey referred to agency configurations (e.g. too many levels of approval are required; expenses do not map from the authorization to the voucher; financial system rejects too many vouchers, etc.) and business policies rather than the E2 Solutions software.

  - There are often user comments to the effect of “I don’t use the [functionality] so I just scored it as neutral”. That is, respondents mark a “3” only because an answer to the question is required when they, for example, operate as approvers but do not themselves travel for work, and have never personally used functionality such as reservations or vouchers. Users note that an “N/A” response option is not available. The UCD/UE team believes that not offering an “N/A” response to TAVS/OBE statements skews the CSS score.

  - We also see some “I don’t really remember this system” and “I only traveled once months ago and my issues were with the airline” comments, yet the user still submitted a score. Additional questions may be needed at the beginning of the survey to validate users and to remind them that the questions are about the E2 Solutions service and application, as they were framed in 2016.

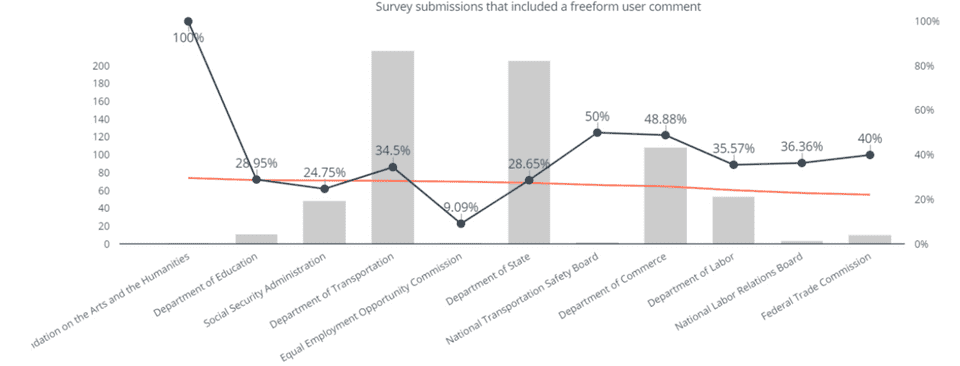

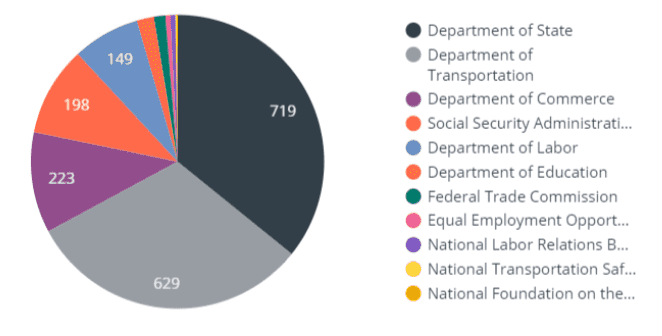

Relative to each agency’s voucher transactions, survey respondents continue to come disproportionately from one or two agencies, which potentially distorts scoring across the user population.

- State remains the largest source, at 35.8% of the data, down from 43.4% in 2018.

- DOT & FAA account for 31.3% of the data, up from 26.7% in 2018.

- We request GSA seek increased response from SSA, DoED, DOC, and DOJ in 2020.

- Since 2017, GSA has determined the contract score as the average of all survey respondents’ answers to the 20 Likert-scored questions. All questions are weighted equally, yet results for the Overall Satisfaction question have been shown to be significantly higher than the contract score. Users may be dissatisfied with some part of the support, itinerary, policy, or reimbursement but satisfied with the overall product or service.

Tracking Overall User Satisfaction

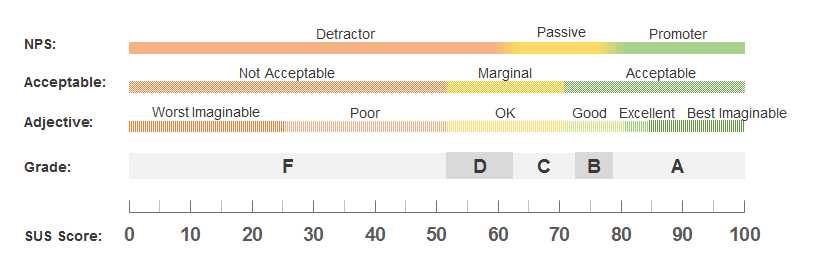

SUS is the measure Usability.gov recommends for monitoring usability over time, and it is the basis for ETS2 usability monitoring. E2 Solutions is approaching the average SUS score of 68.

“You’d need to score above an 80.3 to get an A (the top 10% of scores). This is also the point where users are more likely to be recommending the product to a friend. Scoring at the mean score of 68 gets you a C and anything below a 51 is an F (putting you in the bottom 15%).”
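The grading thresholds in the quotation above can be expressed as a simple classifier. The sketch below uses only the three cut points stated there: above 80.3 is an A, 68 is the mean, and below 51 is an F. It does not attempt the intermediate letter grades, which the quotation does not give. The example scores come from the history table later in this section.

```python
SUS_MEAN = 68.0   # "Scoring at the mean score of 68 gets you a C"
A_CUTOFF = 80.3   # "above an 80.3 to get an A (the top 10% of scores)"
F_CUTOFF = 51.0   # "anything below a 51 is an F (putting you in the bottom 15%)"


def describe_sus(score: float) -> str:
    """Place a SUS score relative to the quoted benchmarks only."""
    if not 0.0 <= score <= 100.0:
        raise ValueError("SUS scores range from 0 to 100")
    if score > A_CUTOFF:
        return "A (top 10%)"
    if score < F_CUTOFF:
        return "F (bottom 15%)"
    return "at or above the mean" if score >= SUS_MEAN else "below the mean"


for event, score in [("Apr-19 GSA Survey", 57.0), ("Oct-18 CWT UX Evals", 70.6)]:
    print(f"{event}: {score} -> {describe_sus(score)}")
```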

To visualize usability scores over time, historic data is charted below for SUS, NPS, GSA Contract Score, and user responses to “Overall Satisfaction” survey questions from usability studies and customer satisfaction surveys completed by CWT, GetThere, GSA, and Deloitte. NPS appears more variable because it rests on a single, heavily weighted question asked less frequently. It is also the simplest measure to gather from volunteers.

When SUS or NPS was not surveyed during a given evaluation, it was estimated using the SUS-NPS regression equation and converter by Jeff Sauro and Jim Lewis at Measuring Usability to complete this graph. The work of Sauro and Lewis is referenced at least three times on usability.gov, including directly from the usability.gov SUS method page. Our Usability Assurance Manager considers SUS and NPS to be somewhat interchangeable. Further, Sauro and Lewis found:

“The percentage of variation in LTR explained by SUS was about 37% (corresponding to a statistically significant correlation of about .606). If you have existing SUS scores from usability evaluations, you can use either of these regression equations to estimate LTR, and from those LTR estimates, compute the corresponding estimated NPS.”

Datapoints in bold below are converted estimates, when one or the other was not available or measured in the particular survey. For future data collection, it is a small effort to collect ratings of NPS in addition to the SUS rather than estimating it, as NPS consists of one single question. It is not practical to gather SUS results with users from prior user evaluations. Where appropriate during usability examinations, UAM will include the 10 SUS statements within exit-question surveys.

| Date | Event | SUS | NPS | Contract Score | Overall Satisfaction | E2 user participants |

|---|---|---|---|---|---|---|

| Oct-14 | GetThere | 81 | 33 | 8 | ||

| Mar-16 | GSA Survey | 62 ¹ | 19.2 | 66 | 64 | 3,246 |

| Apr-16 | CWT UX Evals | 80.1 | 30 | 66 | 70 | 58 |

| Sep-16 | Deloitte ETSNext | 63.5 | -27.0 | 66 | 70 | 33 |

| Apr-17 | GSA Survey | 55.7 | 11.0 | 68.03 | 72 | 1,716 |

| Oct-17 | CWT UX Evals | 80.8 | 33.3 | 68.03 | 72 | 28 |

| Mar-18 | GSA Survey | 57.2 | 17.0 | 69.38 | 73 | 3,704 |

| Sep-18 | GetThere | 84.7 | 34.5 | 69.38 | 73 | 15 |

| Oct-18 | CWT UX Evals | 70.6 | 28.2 | 69.38 | 73 | 9 |

| Apr-19 | GSA Survey | 57.0 | 16.5 | 68.40 | 72 | 2,008 |

¹ SUS questions were not asked in Mar-16. This result is a converted estimate from the answers to “Q7. How satisfied are you with the look and feel of E2 Solutions?”

Performance

CWTSatoTravel measures and monitors system performance on a recurring basis to diagnose and prevent impacts to the user community.

Server Response Time Monitoring

CWTSatoTravel conducts a synthetic test on web page response times for key steps within the E2 Solutions application workflow as a gauge of system performance. These tests provide a measure of performance independent of factors outside of our control, such as the user’s bandwidth and network latency. CWTSatoTravel monitors the results of these tests to determine if action needs to be taken to address any system performance issues that may negatively impact the user experience with E2 Solutions.

Server Outages

For detailed uptime and outage information, please see the E2 Solutions Notification alert emails sent during the POP.

Front-end Optimization (FEO)

Measuring and reporting front-end web performance helps deliver a productive and fast experience. Complex web applications such as E2 Solutions interact with modern browsers in many ways, including AJAX, lazy loading, preloading, asynchronous loading, caching, and responsive design, all of which shape the application’s user experience. Modern web interfaces keep getting heavier: according to HTTPArchive.org data, the median payload for desktop sites now exceeds 1.8 MB. The E2 Solutions interface is designed and coded to resist this trend and keep the application fast, which matters especially because E2 handles significant OCONUS traffic from DOS locations throughout the world.

CWTSatoTravel continues to expand the awareness and scope of its front-end optimization (FEO) performance testing and data collection program. The goal is to observe web metrics that give developers and operations staff better insight into what real users are experiencing, and to track FEO results over time.

CWTSatoTravel tracks how design and code changes impact what users see and how quickly they see it on mobile, tablet and desktop. Site waterfall reports, page load time and total download size, network bandwidth utilization, HTTP headers and front-end performance issues are examined and placed into Test Director for resolution (e.g., combine external styles and scripts, leverage browser and proxy caching).

Real-world network waterfalls for common unprimed- and primed-cache browsers accessing E2 Solutions have been discussed in previous SAUARs. What follows tracks Production web performance events on pages, as reported by browser analytics in customers’ browsers. This new capability reports accurate averages automatically from agency locations throughout the world, based on browser HTTP connection traffic.

Web performance results of the most viewed screens

E2 Solutions continues to perform within the UAM goal of page loads under 2 seconds, with an overall average page load time of 1.79 seconds. The table below lists the top 10 most viewed screens in the application and each screen’s worldwide average production performance.

- Document Interactive Time is the time necessary until first paint events have executed and parts of the page are becoming interactive for the user. Some markup, JS and images may remain to be downloaded. This is a common measurement of “perceived” speed, as users can start reading and understanding screen content well before everything is completely loaded.

- Page Load Time is the total time the browser takes to download and execute all HTML, JS, CSS, and other files referenced on the page and to complete its initial load event.

| Page Title | Avg. Document Interactive Time (sec) | Avg. Page Load Time (sec) |

|---|---|---|

| CW Government Travel | 1.22 | 1.85 |

| At a Glance | 1.17 | 1.83 |

| Authorization - Summary | 1.43 | 1.95 |

| Pending Approvals | 0.75 | 1.13 |

| Trips | 0.73 | 1.33 |

| Voucher - Summary | 1.43 | 1.94 |

| Trip Dashboard | 1.02 | 1.67 |

| Authorization - Basic Information | 1.01 | 1.47 |

| Authorization - Expenses | 1.85 | 2.56 |

| Overall | 1.21 | 1.79 |
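The two metrics defined above can be derived from browser navigation timestamps. The sketch below assumes each analytics record carries the W3C Navigation Timing (Level 1) fields `navigationStart`, `domInteractive`, and `loadEventEnd` in milliseconds. The record layout and function names are hypothetical and are not a description of E2 Solutions’ actual analytics package.

```python
from statistics import mean


def page_metrics(timing: dict[str, int]) -> tuple[float, float]:
    """Return (document interactive time, page load time) in seconds for one page view."""
    start = timing["navigationStart"]
    interactive = (timing["domInteractive"] - start) / 1000
    load = (timing["loadEventEnd"] - start) / 1000
    return interactive, load


def average_by_page(views: list[tuple[str, dict[str, int]]]) -> dict[str, tuple[float, float]]:
    """Average both metrics per page title across all recorded page views."""
    grouped: dict[str, list[tuple[float, float]]] = {}
    for title, timing in views:
        grouped.setdefault(title, []).append(page_metrics(timing))
    return {
        title: (round(mean(m[0] for m in ms), 2), round(mean(m[1] for m in ms), 2))
        for title, ms in grouped.items()
    }


# Hypothetical page views for one screen
views = [
    ("Trips", {"navigationStart": 0, "domInteractive": 700, "loadEventEnd": 1300}),
    ("Trips", {"navigationStart": 5000, "domInteractive": 5760, "loadEventEnd": 6360}),
]
print(average_by_page(views))  # {'Trips': (0.73, 1.33)}
```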

Top 10 slowest loading pages

The Reports, Authorization and Voucher Expenses, and Accounting screens all appear to need performance optimization, and several of them are high-use (high-pageview) screens.

| Page Title | Pageviews (month) | Avg. Document Interactive Time (sec) | Avg. Page Load Time (sec) |

|---|---|---|---|

| Reports | 42,638(0.18%) | 0.99 | 4.50 |

| View Documents | 135,657(0.56%) | 1.10 | 3.67 |

| Voucher - Site Details | 9,389(0.04%) | 1.85 | 3.42 |

| Advance - Accounting | 31,450(0.13%) | 2.49 | 2.80 |

| Voucher - Expenses | 397,882(1.65%) | 1.98 | 2.73 |

| Password Maintenance – Initialize Security Information | 33,323(0.14%) | 1.38 | 2.61 |

| Authorization - Site Details | 483,237(2.00%) | 1.66 | 2.59 |

| Authorization - Expenses | 579,397(2.40%) | 1.85 | 2.57 |

| Voucher - Accounting | 313,632(1.30%) | 1.71 | 2.38 |

| Advance - Basic Information | 37,144(0.15%) | 0.86 | 2.38 |

Slowest Page Loads by Agency Location

The table below lists the agency locations that appear to be most in need of agency IT infrastructure investment. Each of these locations reported average roundtrip page loads longer than our 2-second target during the POP. This information will be provided to agency POCs to help those offices focus their IT infrastructure investment.

Users at these high-pageview locations appear to have the most restrictive and slowest connections, likely because of overloaded network hardware, proxy or software policies, or other localized internet traffic bottlenecks:

- DOC offices in Seattle, WA and Missoula, MT

- DOS offices in Stuttgart, Germany and Atlanta, GA

- EEOC offices in Kansas City, MO and Washington, DC

- DOT offices in Phoenix, AZ

- SSA offices in Woodlawn, MD

For example, browsers in the DOS office in Atlanta report slower average roundtrip page loads (3.39 sec) from the E2 Solutions datacenter in Las Vegas than browsers in the DOS office in Addis Ababa, Ethiopia (2.71 sec).

CWTSatoTravel can drill down into this data to report on the specific subagency code(s) for agency customers investigating these problems.

| City | Agency Name (alphabetical) | Pageviews (by location) | Avg. Page Load Time (sec) |

|---|---|---|---|

| Seattle | Department of Commerce | 107350 | 6.46 |

| Missoula | Department of Commerce | 68838 | 4.76 |

| Chicago | Department of Commerce | 6992 | 2.37 |

| Jeffersonville | Department of Commerce | 238348 | 2.29 |

| San Diego | Department of Justice | 20291 | 2.35 |

| New York | Department of Justice | 3498 | 2.24 |

| Stuttgart | Department of State | 46568 | 6.63 |

| Atlanta | Department of State | 7239 | 3.39 |

| Seoul | Department of State | 2510 | 3.04 |

| Addis Ababa | Department of State | 14074 | 2.71 |

| Coffeyville | Department of State | 1622093 | 2.05 |

| Woodlawn | Department of State | 9603 | 2.03 |

| Phoenix | Department of Transportation | 2363 | 2.95 |

| Ontario | Department of Transportation | 5421 | 2.34 |

| Olympia | Department of Transportation | 4730 | 2.09 |

| Kansas City | Equal Employment Opportunity Commission | 49699 | 5.28 |

| Washington, DC | Equal Employment Opportunity Commission | 27907 | 3.19 |

| Oklahoma City | Federal Aviation Administration | 1584045 | 2.06 |

| Chicago | Railroad Retirement Board | 17917 | 2.27 |

| Woodlawn | Social Security Administration | 431958 | 2.7 |

| Catonsville | Social Security Administration | 42344 | 2.46 |

| Kansas City | Social Security Administration | 58551 | 2.36 |

| Washington, DC | U.S. Office of Personnel Management | 235123 | 2.01 |
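Screening locations against the 2-second target amounts to filtering per-location averages and sorting the results. A minimal sketch, using a few rows copied from the table above; the data structure and function name are illustrative only.

```python
TARGET_SEC = 2.0  # UAM goal for average roundtrip page load

# (city, agency, pageviews, average page load time in seconds), from the table above
rows = [
    ("Seattle", "Department of Commerce", 107350, 6.46),
    ("Stuttgart", "Department of State", 46568, 6.63),
    ("Addis Ababa", "Department of State", 14074, 2.71),
    ("Washington, DC", "U.S. Office of Personnel Management", 235123, 2.01),
]


def slowest_locations(data, target=TARGET_SEC):
    """Return (city, agency, load time) for locations above target, slowest first."""
    over = [(city, agency, load) for city, agency, _views, load in data if load > target]
    return sorted(over, key=lambda r: r[2], reverse=True)


for city, agency, load in slowest_locations(rows):
    print(f"{city} ({agency}): {load:.2f} sec")
```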

FEO Observations

- The IE11 JavaScript engine is comparatively slow, which penalizes site speed and responsiveness. JavaScript files also download and execute sequentially in IE, not in parallel as in other browsers. CWTSatoTravel observes users migrating away from IE11 as agency IT policies allow browser choice.

- Users returning to E2 Solutions within 30 days should have all external images, styles, and JavaScript files needed by the UI already cached, unless they have cleared their browser cache. UAM recommends extending this period to 90 days, although caching is also managed downstream by agency caching appliances and load balancers.

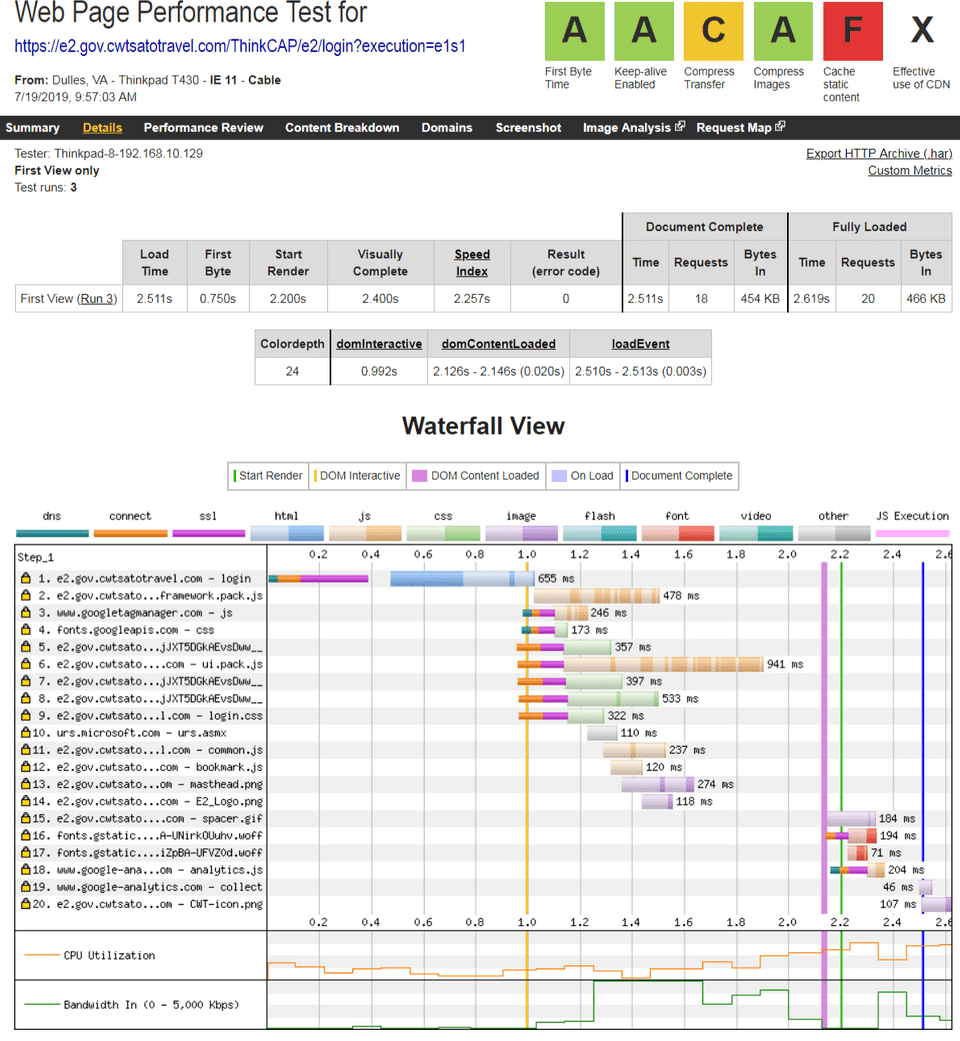

Webpagetest.org rating and network waterfall asset graph for the E2 login screen

The webpagetest.org “F” grade below for caching results from six remaining external files that are not marked as cacheable for at least 30 days. UAM has logged a defect to update E2’s webserver configuration so that these files are marked as cacheable in their HTTP response headers. Without these headers, the six files are needlessly downloaded again on each returning page load.
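The caching finding above comes down to the `Cache-Control` response header each static file returns. The sketch below checks whether a header value allows browser caching for at least 30 days (2,592,000 seconds). The function names and sample header values are hypothetical, and the parser handles only the common `max-age` and `no-store` directives, not the full HTTP caching grammar.

```python
from typing import Optional

THIRTY_DAYS = 30 * 24 * 60 * 60  # 2,592,000 seconds


def max_age_seconds(cache_control: str) -> Optional[int]:
    """Extract max-age (seconds) from a Cache-Control header value, if present."""
    for directive in cache_control.split(","):
        name, _, value = directive.strip().partition("=")
        if name.lower() == "max-age" and value.isdigit():
            return int(value)
    return None


def is_cacheable_for_30_days(cache_control: str) -> bool:
    """True when the header permits browser caching for at least 30 days."""
    directives = {d.strip().split("=")[0].lower() for d in cache_control.split(",")}
    if "no-store" in directives:
        return False
    age = max_age_seconds(cache_control)
    return age is not None and age >= THIRTY_DAYS


print(is_cacheable_for_30_days("public, max-age=7776000"))  # True (90 days)
print(is_cacheable_for_30_days("max-age=3600"))             # False (1 hour)
```

A `max-age` of 7,776,000 seconds corresponds to the 90-day period UAM recommends.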

The “C” grade below for compression results from some, but not all, of E2’s webserver traffic not being gzipped. For optimal speed, developers aim to reduce both the volume and the size of traffic sent over the wire.
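To illustrate why the compression grade matters, the sketch below gzips a repetitive, text-based asset in memory with Python’s standard `gzip` module and compares sizes. The sample stylesheet is made up; real savings depend on the file and on the server’s compression settings.

```python
import gzip

# Hypothetical, repetitive CSS similar to what a web application serves.
css = "".join(
    f".step-{i} {{ margin: 0 auto; padding: 4px 8px; color: #333333; }}\n" for i in range(200)
).encode("utf-8")

compressed = gzip.compress(css)
ratio = len(compressed) / len(css)
print(f"original: {len(css)} bytes, gzipped: {len(compressed)} bytes ({ratio:.0%} of original)")

# The browser recovers the identical bytes after decompressing.
assert gzip.decompress(compressed) == css
```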

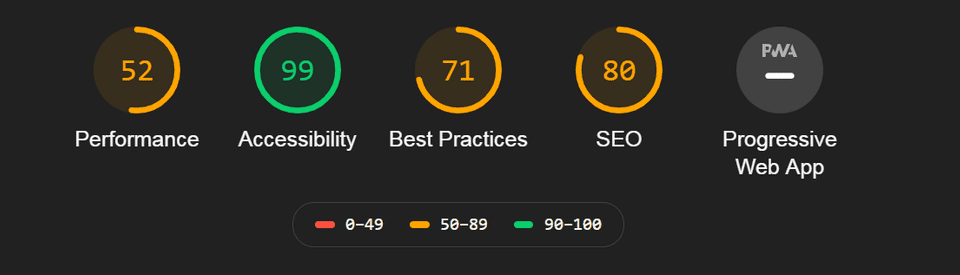

Lighthouse Scoring for E2 Solutions

Google Lighthouse is an open-source, automated tool for improving the quality of web applications. Lighthouse audits a web page for performance, accessibility, search engine optimization (SEO), and other industry best practices, assigns a weighted 0-100 score to each category, and produces a report to help developers resolve the issues found. Lighthouse scoring evolves over time, as Google regularly updates its best practices and performance goals for web applications. CWTSatoTravel endeavors to track and improve upon these industry recommendations. The dashboard summary results for the audit of the E2 login screen follow.

For more information about the Lighthouse tool and how to use it, please visit: https://developers.google.com/web/tools/lighthouse/

| Date/Document | Performance | Accessibility | Best Practices | SEO | Avg. |

|---|---|---|---|---|---|

| SAUAR 4.2 | 54 | 93 | 73 | 78 | 74.5 |

| 18 NOV 2018 | 59 | 82 | 79 | 78 | 74.5 |

| SAUAR 5.1 | 52 | 99 | 71 | 80 | 75.5 |
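The “Avg.” column in the table above matches the unweighted mean of the four category scores; Lighthouse itself weights metrics within each category, but this cross-category average appears to be a summary computed for this report. A short check that reproduces it from the table’s values (the dictionary layout is illustrative):

```python
from statistics import mean

# Category scores (Performance, Accessibility, Best Practices, SEO) from the table above
audits = {
    "SAUAR 4.2": [54, 93, 73, 78],
    "18 NOV 2018": [59, 82, 79, 78],
    "SAUAR 5.1": [52, 99, 71, 80],
}

for label, scores in audits.items():
    print(f"{label}: {mean(scores):.1f}")
```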

Findings from Usability Evaluations

Usability & Accessibility Audits

An internal accessibility audit of the modernized User Profile was performed during the POP. Findings were provided to the development team for remediation.

Moderated Remote Testing of New User Profile

CWTSatoTravel performed moderated remote user testing during the POP. Three users, from DOJ, DOS, and BLS, each spent approximately 20 minutes on the redeveloped User Profile to help identify any issues with its new Angular-based, modernized user input pattern. Interview notes, recommendations, and screen recordings were shared with the development team for remediation.

Information Architecture Prototypes & Wireframes

Online information architecture prototypes for the Mobile Shop First and User Profile sections are being evaluated with internal stakeholders.

Considerations regarding obtaining federal employee usability study participants

Uncompensated agency volunteers willing to leave their work tasks to participate altruistically in a study are difficult to find. CWTSatoTravel often arranges to meet federal users at their own work location or building. It is common in the private sector to compensate usability study participants for their time, and it is also common for government researchers to compensate public participants. However, many factors prohibit the private sector from compensating federal employees. Usability.gov states:

“If your participants are federal employees, you cannot pay them for their time.”

However, the ETS2 Master Contract requires vendors to periodically test representative users:

Section C.3.3.1 2) a. “Periodically conducting usability tests involving representative users. Such testing should occur in conjunction with the Contractor’s software development life cycle, prior to any release that involves changes to the ETS2 user interface;”

E2 Solutions’ target audience is geographically dispersed, so in-person testing requires travel by either the participants or the researchers. Remote testing makes it possible to test with a larger and more diverse group of people than any vendor or agency lab could accommodate, and it eliminates both the need for a lab and the lab’s effect on participants. Unmoderated testing further expands the pool of participants beyond what a limited number of proctors can support. However, agency users were commonly found to face administrative or technical barriers to full participation, such as being unable to install browser plugins or webinar software because of IT restrictions.

Although we request agency travelers, in-person interviews often skew toward arrangers and administrators, who tend to be the first to hear about opportunities to provide vendor feedback. Obtaining volunteers who represent the user population and personas across varied testing continues to be a challenge in meeting this Master Contract requirement.

Analysis and Reviews of Click-Stream Web Logs, Including Error Conditions, Online Help Access Incidents, Task Times, and Completion/Abandonment Data

Error Conditions

Understanding the document error rate is as important as understanding the average time to complete a document; our goal is to reduce both. CWTSatoTravel seeks opportunities to expand methods of tracking and reporting key tasks that have a linear path to “complete” the task, and to report on common workflow tasks that can be tracked over time. Error conditions may occur at the page, server, or task and document level, so the reporting scope must be considered. Server, document, and task errors are well tracked, and UAM is investigating methods to track and report page-level errors, such as form input errors, in E2 Solutions’ web analytics package.

Task error rates are commonly gathered in UX evaluations in which users test prototype interfaces. Errors observed during task completion in user evaluations have been reported extensively in previous SAUARs and usability session results presentations. Error rate evaluations guide redevelopment efforts and help measure the impact of improvements.

Online help access incidents

The E2 Solutions online help software, the E2 Knowledge Portal, is built on Oracle Service Cloud (OSvC).

Top 20 Complex Help Page Topics

The following are the top 20 help page topics that led a user to start a chat session or send an email to a help desk analyst. These topics indicate sections of E2 Solutions where users may need additional assistance, or where the help documentation needs clearer communication and direction.

| Answer ID | Summary | # Immediate Ask a Question | # Ask a Question | # Immediate Chats | # Chats | Total |

|---|---|---|---|---|---|---|

| 3957 | Help for voucher Lodging Expenses window | 3 | 15 | 4 | 15 | 37 |

| 4175 | Reservations: Changing reservations / Cancel Reservations | 1 | 14 | 7 | 15 | 37 |

| 4069 | Authorizations \| Vouchers: Closing a trip / change voucher type to final voucher | 1 | 21 | 2 | 5 | 29 |

| 4070 | Authorizations \| Reservations \| Vouchers: Cancel trip | 3 | 16 | 1 | 7 | 27 |

| 2870 | Training: Computer Based Tutorials (CBT) | 1 | 12 | 2 | 11 | 26 |

| 3965 | Help for authorization or advance Account Code Selection window | 3 | 6 | 3 | 9 | 21 |

| 3980 | Help for authorization Manage Trip Reservations window | 3 | 8 | 2 | 8 | 21 |

| 4054 | Voucher: Creating and submitting a voucher | 1 | 14 | 1 | 3 | 19 |

| 4172 | Authorizations \| Vouchers: Change approver / change approval routing for a document | 1 | 4 | 3 | 11 | 19 |

| 3968 | Help for authorization Basic Information step | 0 | 2 | 2 | 14 | 18 |

| 1541 | Administration: Create user account | 3 | 9 | 1 | 4 | 17 |

| 3970 | Help for authorization Reservation step | 0 | 2 | 2 | 13 | 17 |

| 3973 | Help for authorization Summary step | 0 | 1 | 5 | 10 | 16 |

| 4032 | Help for Itinerary window | 0 | 0 | 3 | 13 | 16 |

| 3943 | Glossary: TMC fee amounts - how to obtain current TMC fees | 0 | 2 | 4 | 9 | 15 |

| 4053 | Authorizations: Amending an authorization | 0 | 1 | 2 | 12 | 15 |

| 3971 | Help for authorization Site Details step | 0 | 3 | 5 | 6 | 14 |

| 3846 | Training: User and Administrator guides, Quick Reference Cards | 3 | 6 | 0 | 4 | 13 |

| 4117 | Authorization: Delete or cancel authorization | 0 | 8 | 0 | 5 | 13 |

| 2685 | Glossary \| Vouchers: Voucher transaction fee (VTF) | 1 | 3 | 2 | 5 | 11 |

Most visited Help and Documentation topics

The following remain the top Help and Documentation topics by visits. Trends among the most-used help topics indicate the areas of the site where users have the most difficulty. Each of these topics continues to be heavily accessed and closely mirrors E2 Solutions’ help desk call topic volume:

- Changing Reservations

- Help for Expenses, Help for Lodging Expenses

- Creating and Submitting a Voucher

- Help for Remarks

- Manage Trip Reservations

- Login Assistance > Login Instructions / Account Locked

Help and Documentation average duration

The following are the top Help and Documentation topics by total time spent on the answer during this POP, with average and total duration in seconds. These durations help indicate the most complex sections of E2 Solutions.

| Answer ID | Summary | Visits | Avg. Time on Answer (sec) | Total Time (sec) |

|---|---|---|---|---|

| 1309 | Contact CWTSatoTravel | 11335 | 75.13 | 851615 |

| 2870 | Training: Computer Based Tutorials (CBT) | 2295 | 194.81 | 447078 |

| 3846 | Training: User and Administrator guides, Quick Reference Cards | 1070 | 171.19 | 183177 |

| 3965 | Help for authorization or advance Account Code Selection window | 2662 | 53.86 | 143364 |

| 4175 | Reservations: Changing reservations / Cancel Reservations | 1103 | 109.16 | 120404 |

| 3957 | Help for voucher Lodging Expenses window | 1184 | 71.37 | 84499 |

| 3970 | Help for authorization Reservation step | 1142 | 70.16 | 80121 |

| 3980 | Help for authorization Manage Trip Reservations window | 1223 | 60.96 | 74557 |

| 4032 | Help for Itinerary window | 1506 | 47.02 | 70817 |

| 4053 | Authorizations: Amending an authorization | 684 | 88.98 | 60860 |

| 3954 | Help for authorization Lodging Expenses window | 597 | 98.71 | 58932 |

| 4070 | Authorizations \| Reservations \| Vouchers: Cancel trip | 659 | 76.64 | 50508 |

| 4259 | Help for Held Reservations window (Show Held Reservations) | 855 | 53.73 | 45935 |

| 4054 | Voucher: Creating and submitting a voucher | 392 | 113.19 | 44372 |

| 4069 | Authorizations \| Vouchers: Closing a trip / change voucher type to final voucher | 292 | 150.82 | 44040 |

| 3966 | Help for authorization Add New Expense window | 625 | 65.74 | 41086 |

| 3958 | Help for voucher Meals and Incidental Expenses window | 469 | 74.61 | 34991 |

| 4117 | Authorization: Delete or cancel authorization | 342 | 98.92 | 33829 |

| 4216 | Advances \| Authorizations \| Local Travel \| OAs and GAs \| Vouchers - Recall document pending approval | 406 | 82.27 | 33400 |

| 3523 | Reservations: Get a copy of reservation invoice, print invoice, print itinerary | 482 | 68.63 | 33081 |

| 4172 | Authorizations \| Vouchers: Change approver / change approval routing for a document | 402 | 80.96 | 32547 |

| 3955 | Help for authorization Meals and Incidental Expenses window | 358 | 90.85 | 32524 |
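Total Time in the table above is approximately Visits multiplied by the average Time on Answer; the small differences presumably come from rounding of the reported average. A sketch that recomputes and ranks topics this way, using three rows from the table (the function name is illustrative):

```python
# (answer ID, visits, average seconds on answer), from the table above
topics = [
    (1309, 11335, 75.13),
    (2870, 2295, 194.81),
    (3846, 1070, 171.19),
]


def rank_by_total_time(rows):
    """Estimate total seconds per topic (visits x average) and sort descending."""
    totals = [(answer_id, round(visits * avg_sec)) for answer_id, visits, avg_sec in rows]
    return sorted(totals, key=lambda t: t[1], reverse=True)


for answer_id, total_sec in rank_by_total_time(topics):
    print(answer_id, total_sec, f"({total_sec / 3600:.1f} hours)")
```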

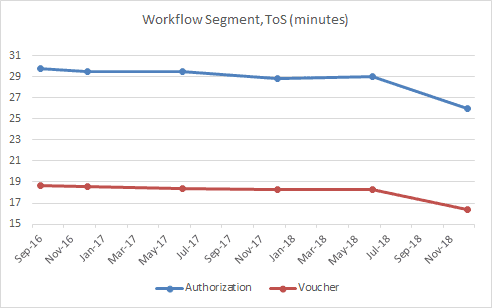

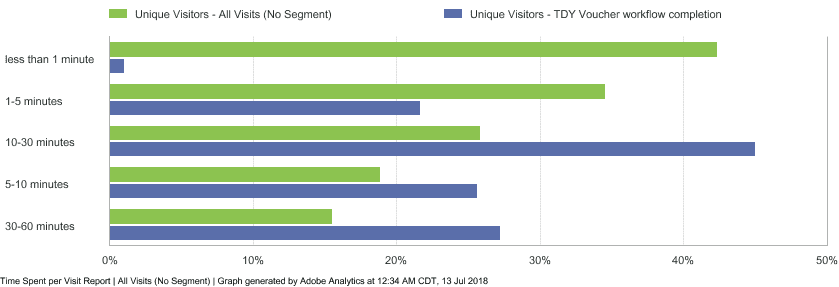

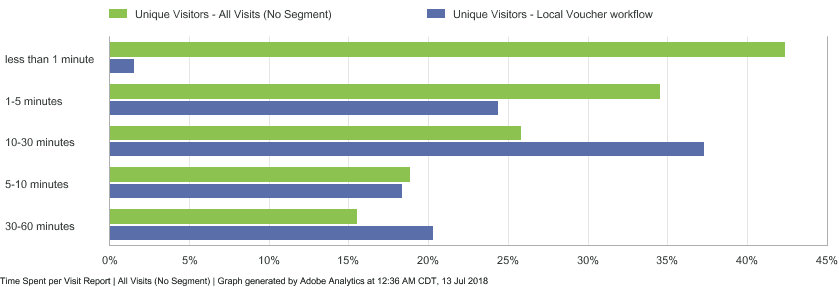

Task times

- Average time on site within Authorization and Voucher workflow, removing top and bottom outliers.

- Authorization workflow, details

- Voucher workflow, details

- Local Voucher workflow, details
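The task-time averages above exclude top and bottom outliers. One common way to do that is a trimmed mean, sketched below. The 10% trim proportion and the sample durations are assumptions for illustration, since this report does not state how outliers were removed.

```python
from statistics import mean


def trimmed_mean(durations: list[float], proportion: float = 0.10) -> float:
    """Mean after dropping the given proportion of values from each end."""
    if not 0 <= proportion < 0.5:
        raise ValueError("proportion must be in [0, 0.5)")
    values = sorted(durations)
    k = int(len(values) * proportion)  # number of values to drop from each tail
    kept = values[k:len(values) - k]
    return mean(kept)


# Hypothetical session durations in seconds, including two extreme outliers.
sessions = [5, 410, 420, 430, 440, 450, 460, 470, 480, 7200]
print(trimmed_mean(sessions))  # 445
```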

Completion Data, Task Conversion and Abandonment Data

- The UCD/UE team continues to investigate metrics around touchless bookings and rejected trips. Note that the touchless rate depends on several factors other than usability, including trip complexity and agency policy (e.g., some agencies require that all reservations that may result in the exchange of an unused ticket be booked offline). The rejected document rate also depends on outside factors, such as financial system and funding availability, and agency and departmental policy. Reporting at a more granular level is possible but not trivial, and reporting that requires development resources is prioritized against other usability product improvement efforts.

- As is common and expected, satisfaction increases with frequency of use. Satisfaction similarly increases with the number of trips taken.

- Across document types, 12-18% of users exit the workflow while estimating or declaring expenses, a common source of user frustration and a step where some users need to complete offline tasks, such as gathering their receipts.

- Goal-conversion metrics for Authorizations, Vouchers, and Local Vouchers follow below. Of note:


Authorization workflow

- In the Authorization process, ~20-25% of users appear to window-shop: they leave the site after creating reservations, or after returning from the OBE, without completing the Authorization during that session.

- 46.8% of users start their Authorization and submit to an Approver on the first try.


Voucher workflow

- 60.2% of users complete their TDY Voucher on the first try.


Local Voucher workflow

- 57.2% of users complete their Local Voucher on the first try.
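The conversion figures above are ratios of sessions that reach a goal step to sessions that start the workflow. The sketch below computes a first-try completion rate and a window-shopping rate from per-session step lists. The step names, session data, and the definition of “first try” as completion within a single session are hypothetical and do not reflect E2 Solutions’ analytics configuration.

```python
# Each session lists the workflow steps the user reached, in order (hypothetical data).
sessions = [
    ["start", "reservations", "submit"],
    ["start", "reservations"],                  # left after booking: window-shopping
    ["start", "expenses"],                      # exited while entering expenses
    ["start", "reservations", "expenses", "submit"],
]


def rate(matching: int, total: int) -> float:
    """Percentage rounded to one decimal place."""
    return round(100 * matching / total, 1)


started = [s for s in sessions if s and s[0] == "start"]
completed = sum(1 for s in started if "submit" in s)
window_shopped = sum(1 for s in started if s[-1] == "reservations")

print(f"first-try completion: {rate(completed, len(started))}%")   # 50.0%
print(f"window-shopping: {rate(window_shopped, len(started))}%")   # 25.0%
```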


E2 Help Desk Incident Reports

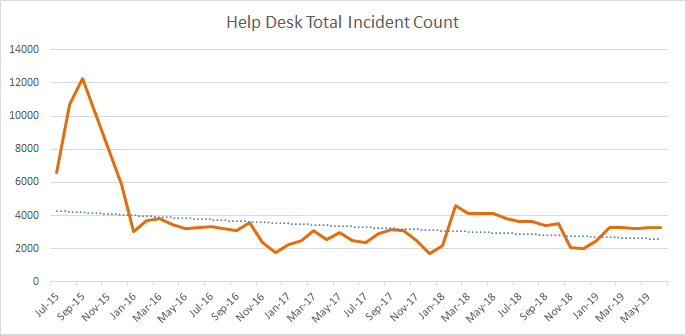

CWTSatoTravel’s E2 Help Desk Incident reports are included in the Appendix. Observations from this information include:

- Calls to unlock user accounts and other profile issues have become the largest cumulative category of caller requests.

- Assistance with creating or managing reservations remains near the top of caller requests.

- Many ETS2 end users do not have direct access to CWTSatoTravel’s help desk, as they first interact with their own Agency Tier 1 help desk. This means CWTSatoTravel’s Help Desk reports may not contain the majority of “end-user” incidents for ETS2. Overall, the CWTSatoTravel Help Desk total incident count remains steady at around 3,000 calls per month.

Top 12 Help Desk call topics, by Agency (May 2019)

| Category | DOC | DOL | DOT | FAA | SSA | Total |

|---|---|---|---|---|---|---|

| E2 Profile > General | 105 | 94 | 39 | 112 | 3 | 353 |

| Administration > Administer Users | 105 | 115 | 21 | 60 | 0 | 301 |

| Vouchers > General | 101 | 104 | 19 | 28 | 1 | 253 |

| Authorization > General | 29 | 205 | 1 | 11 | 4 | 250 |

| Reservation > Create/Cancel/Modify | 20 | 176 | 12 | 11 | 5 | 224 |

| Reservation > General | 32 | 148 | 7 | 9 | 1 | 197 |

| Authorization > Reservations | 12 | 107 | 5 | 4 | 2 | 130 |

| Other > Site Feedback | 28 | 17 | 12 | 36 | 6 | 99 |

| Authorization > Basic Info/Workflow | 9 | 73 | 4 | 4 | 1 | 91 |

| Administration > General | 27 | 24 | 9 | 18 | 2 | 80 |

| Reservation > Itinerary/Receipt/Fees | 6 | 67 | 0 | 4 | 1 | 78 |

| Vouchers > Expenses | 6 | 67 | 0 | 2 | 0 | 75 |

Help Desk Total Incident Counts

Feedback from users during ETS2 training

Task completion rate, time on task, and satisfaction are surveyed among attendees of training provided by CWTSatoTravel. Trainers follow the best practice of documenting any issues identified during training sessions. These issues are evaluated and logged in our defect tracking software where warranted.

- No usability issues were reported to UAM by trainers during this reporting period.

Progress Mitigating Usability Issues in the SDLC

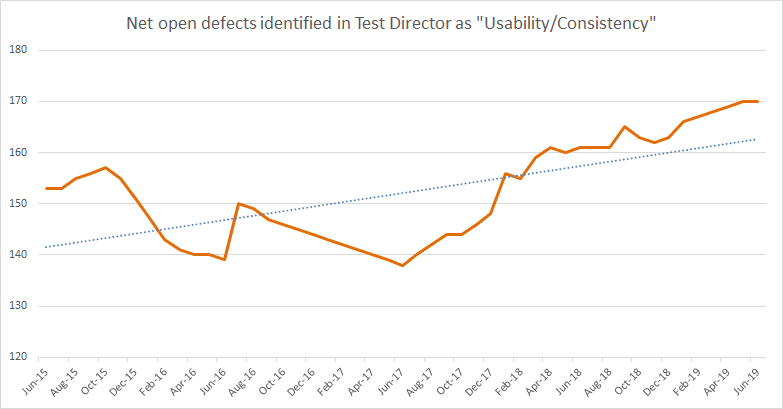

User experience problems (name, description, and severity rating) are recorded in common, understandable terms in our defect tracking software, Test Director and Rally, which CWTSatoTravel’s Customer Service Center, developers, and product support teams can access.

A common technique for assessing the impact of a problem is to assign impact scores according to the problem’s severity and frequency of occurrence. What follows is an ongoing chart of net open usability defects in E2 Solutions’ Software Development Lifecycle. Because these counts are reported directly from the Testing and Development Team’s defect tracking software, some fixes may not yet be built into the Production baseline. The following details net counts of remaining open defects in Test Director labeled “Usability/Consistency”:

Unresolved “Usability” or “Consistency” items are classified into the following categories according to our defect prioritization system. No Block issues were reported, and none remain unresolved.

| Severity | Net Open Defects | % Total | Severity Description |

|---|---|---|---|

| Trivial | 21 | 12% | Idea, suggestion, or observation that could enhance the overall experience |

| Minor | 98 | 58% | Minor effect on task performance, delays user briefly, cosmetic |

| Major | 53 | 30% | Significant delay or frustration but allows user to complete the task |

| Block | 0 | 0% | Prevents task completion |

| Total | 170 | 100% | |
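The impact-score technique mentioned above can be sketched as a severity weight multiplied by how often a problem occurs. The numeric weights and problem records below are hypothetical; this report does not define a weighting scheme.

```python
# Hypothetical numeric weights for the severity levels used in the table above.
SEVERITY_WEIGHT = {"Trivial": 1, "Minor": 2, "Major": 3, "Block": 4}


def impact_score(severity: str, participants_affected: int, participants_total: int) -> float:
    """Severity weight x frequency of occurrence (share of participants affected)."""
    frequency = participants_affected / participants_total
    return SEVERITY_WEIGHT[severity] * frequency


# Hypothetical problems observed in a 10-participant evaluation.
problems = {
    "Unclear field label": ("Minor", 6, 10),
    "Hard-to-find approver setting": ("Major", 3, 10),
    "Tooltip wording": ("Trivial", 2, 10),
}

ranked = sorted(problems, key=lambda p: impact_score(*problems[p]), reverse=True)
for name in ranked:
    print(f"{impact_score(*problems[name]):.2f}  {name}")
```

Note that a frequent Minor problem can outrank a rarer Major one under this kind of scoring, which is why both inputs are tracked.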

Method of Determining Severity Ratings for Usability Problems

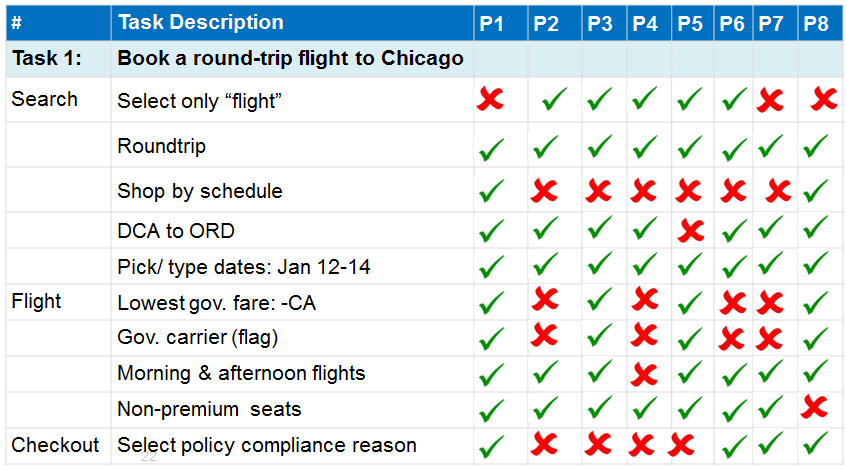

If a user encounters a problem while attempting a task and the problem can be associated with the interface, it is considered a user interface problem. The usual method for measuring a problem’s frequency of occurrence is to divide the number of participants who encountered it by the total number of participants. From an analytical perspective, a useful way to organize UI problems is to associate them with the users who encountered them, as shown in this example of a UI Problem Matrix:

Recording Error Rates

Errors are any unintended action, slip, mistake, or omission a user makes while attempting a task. Error counts can go from 0 (no errors) to dozens. Errors provide excellent diagnostic information on why users are failing tasks and, where possible, are mapped to UI problems. Errors are assigned binary measures: the user either encountered an error (1 = yes) or did not (0 = no).
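A UI Problem Matrix and the binary error coding described above can both be represented as participant-by-item tables of 0s and 1s. The sketch computes each problem’s frequency of occurrence (participants who encountered it divided by all participants) and a task error rate from raw error counts. The participants, problems, and counts are hypothetical.

```python
# Rows: participants; columns: UI problems (1 = encountered, 0 = not). Hypothetical data.
problem_matrix = {
    "P1": {"UI-1": 1, "UI-2": 0, "UI-3": 1},
    "P2": {"UI-1": 1, "UI-2": 1, "UI-3": 0},
    "P3": {"UI-1": 0, "UI-2": 0, "UI-3": 0},
    "P4": {"UI-1": 1, "UI-2": 0, "UI-3": 0},
}


def problem_frequency(matrix):
    """Share of participants who encountered each problem."""
    n = len(matrix)
    problems = next(iter(matrix.values())).keys()
    return {p: sum(row[p] for row in matrix.values()) / n for p in problems}


def error_rate(error_counts):
    """Binary-code raw error counts (any error = 1) and return the share with errors."""
    coded = [1 if count > 0 else 0 for count in error_counts]
    return sum(coded) / len(coded)


print(problem_frequency(problem_matrix))  # {'UI-1': 0.75, 'UI-2': 0.25, 'UI-3': 0.25}
print(error_rate([0, 3, 0, 12, 1]))       # 0.6
```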

Task Time

Task time is how long a user spends on an activity. It is most often the time users take to successfully complete a predefined task scenario, but it can also be total time on a web page or call length. It is typically reported as an average. There are several ways of measuring and analyzing task duration:

- Task completion time — time of users who completed the task successfully

- Time until failure — time on task until users give up or complete the task incorrectly

- Total time on task — the total duration of time users spend on a task
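The three task-duration measures listed above differ only in which attempts they include. A minimal sketch over hypothetical attempts, each recorded as (seconds, completed successfully), with each measure reported as an average:

```python
from statistics import mean

# Hypothetical task attempts: (duration in seconds, completed successfully?)
attempts = [(95, True), (120, True), (240, False), (80, True), (300, False)]

# Task completion time: only successful attempts.
completion_time = mean(t for t, ok in attempts if ok)
# Time until failure: only attempts that gave up or finished incorrectly.
time_until_failure = mean(t for t, ok in attempts if not ok)
# Total time on task: every attempt, regardless of outcome, averaged.
total_time_on_task = mean(t for t, _ in attempts)

print(round(completion_time, 1), time_until_failure, total_time_on_task)
```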

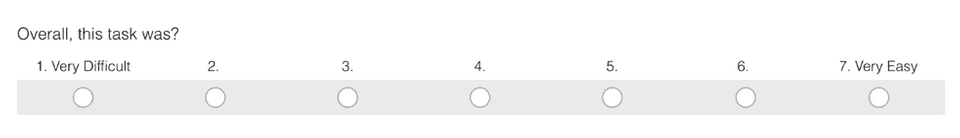

Task Satisfaction Ratings

Asking users how difficult a task was to complete confidently is valuable alongside task completion rates. Even if task completion is at 100%, we also need to understand whether users considered the task easy or difficult, and how that compares to their expectations. Tracking the mean rating for a particular task over time shows how updated designs have improved the user experience. Such subjective measures are especially helpful for tasks with high “successful” completion rates: a 100% completion rate cannot be improved further, but a task that users struggled to complete and rated as difficult can still be improved.

Follow-up questionnaires that measure the perceived ease of use of a system are commonly completed immediately after a task (post-task questionnaires, such as the Single Ease Question, or SEQ), at the end of a usability session (post-test questionnaires), or outside of a usability test. Here is an example of a post-task survey SEQ:

Determining Combined Scores

In general, users who quickly complete tasks tend to rate tasks as easier. Yet some users fail at tasks and still rate them as easy, while others complete tasks quickly and report finding them difficult. Although usability metrics often correlate significantly, they do not correlate strongly enough for one metric to replace another or to stand alone. Reporting on multiple task metrics can become cumbersome over time, so it can be easier to combine them into a single usability score.

Further, a combined usability score is a useful tool for communicating with non-design stakeholders. The simplicity and influence of a numerical score help make usability research digestible and impactful at a glance.
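One simple way to combine task metrics is to rescale each to a 0-1 range and average them. The sketch below is an illustrative equal-weight combination; it is not the standardized Single Usability Metric (SUM) from the usability literature, and it is not a method this report prescribes. The target time, the 7-point ease scale (as used by the SEQ), and the input values are assumptions.

```python
def combined_score(completion_rate: float, mean_time_sec: float, target_time_sec: float,
                   mean_ease: float, ease_max: int = 7) -> float:
    """Equal-weight 0-100 score from completion, time, and ease ratings (illustrative)."""
    completion = completion_rate                         # already 0-1
    speed = min(1.0, target_time_sec / mean_time_sec)    # 1.0 at or under the target time
    ease = (mean_ease - 1) / (ease_max - 1)              # 1..7 rating rescaled to 0-1
    return round(100 * (completion + speed + ease) / 3, 1)


# Hypothetical task: 80% completion, 150 s average vs. a 120 s target, mean ease 5.5 of 7.
print(combined_score(0.80, 150, 120, 5.5))
```

Equal weighting keeps the score easy to explain to non-design stakeholders; a team could weight the components differently if one metric matters more for a given task.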

UAM adopted the 10-question System Usability Scale (SUS), as well as the single-question NetPromoter™ Score (NPS), to track and measure overall usability scores in user evaluations and surveys. SUS and NPS have a strong positive correlation and can be used to forecast one another.

System Usability Scale (SUS)

A well-known questionnaire used in user experience research is the System Usability Scale. SUS provides a proven and reliable tool for measuring the usability of an application. It consists of a 10-item questionnaire with five response options for respondents, from strongly disagree to strongly agree. Each response is first converted to a 0-4 contribution: for the odd-numbered (positively worded) items, the scale position minus 1; for the even-numbered (negatively worded) items, 5 minus the scale position. The contributions are added together and then multiplied by 2.5 to convert the 0-40 total to a 0-100 score. Though the scores are 0-100, they are not percentages and should be considered only in terms of their percentile ranking against industry peers. The average SUS score (at the 50th percentile) across industries is 68.
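The SUS scoring procedure described above can be sketched as follows, assuming responses are coded 1 (strongly disagree) to 5 (strongly agree) and listed in questionnaire order. The function name is illustrative.

```python
def sus_score(responses: list[int]) -> float:
    """Score one participant's 10 SUS responses (each 1-5) on the 0-100 SUS scale."""
    if len(responses) != 10 or any(r not in range(1, 6) for r in responses):
        raise ValueError("expected ten responses, each from 1 to 5")
    total = 0
    for item, r in enumerate(responses, start=1):
        # Odd items are positively worded; even items are negatively worded.
        total += (r - 1) if item % 2 == 1 else (5 - r)
    return total * 2.5  # 0-40 raw sum -> 0-100


# Strongly agree with every positive item, strongly disagree with every negative one.
print(sus_score([5, 1, 5, 1, 5, 1, 5, 1, 5, 1]))  # 100.0
print(sus_score([3] * 10))                         # 50.0
```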

NetPromoter (NPS)

NetPromoter™ is a well-established general bellwether, even though it is less sensitive to UX-focused concerns. NPS is a single question about someone’s likelihood to recommend (LTR) a product:

“How likely are you to recommend this product to a friend or colleague?”

The response option is an 11-point scale numbered from 0 to 10, with higher numbers indicating a higher likelihood to recommend. Promoters are respondents who choose 9 or 10, detractors are those who choose 6 or below, and those who choose 7 or 8 are passives. The “net” in NetPromoter comes from how the responses are scored: subtract the percentage of detractors from the percentage of promoters to get the Net Promoter Score, which ranges from -100 to 100.
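The NPS calculation described above, sketched for a list of 0-10 responses (the function name and sample responses are hypothetical):

```python
def net_promoter_score(responses: list[int]) -> float:
    """Percentage of promoters (9-10) minus percentage of detractors (0-6)."""
    if not responses or any(r not in range(0, 11) for r in responses):
        raise ValueError("expected one or more responses from 0 to 10")
    promoters = sum(1 for r in responses if r >= 9)
    detractors = sum(1 for r in responses if r <= 6)
    return 100 * (promoters - detractors) / len(responses)


# 5 promoters, 3 passives, 2 detractors out of 10 respondents: 50% - 20% = 30
print(net_promoter_score([10, 9, 9, 10, 9, 8, 7, 7, 6, 3]))  # 30.0
```

Passives count toward the total number of respondents but toward neither percentage, so a survey full of 7s and 8s scores 0.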

Periodic Review of User Types, User Tasks, and Use Contexts

It is important for the UCD/UE team to periodically review the critical goals of the ETS2 E2 Solutions product to ensure that E2 Solutions’ design and usability features account for the evolving end-to-end travel process and meet the needs not only of government travelers, but also of travel arrangers and travel approvers.

Personas

Personas add a real-world dimension when thinking through how users interact with the system, help focus usability design decisions, and test and prioritize features throughout the development process. Information collected from team members, and throughout various usability tests and assessments, can be used to update Persona characteristics, such as personal demographics, professional traits, and technical capabilities. Through the ETSNext initiative, CWTSatoTravel and ETS2 PMO Personas were merged to provide reliable and realistic representations of key users.

Critical User Tasks

Starting with the use cases provided by the ETS2 PMO as a baseline, the ETS2 E2 Solutions Team periodically updates the critical user tasks within the ETS2 E2 Solutions application. Once the critical goals of the ETS2 E2 Solutions product are reassessed, data from usability assessments can be analyzed to determine whether critical tasks have changed, been added, or become obsolete. This can be done by periodically asking questions described on usability.gov, such as:

- What will users do when using E2 Solutions? (User tasks, content, features and functionality)

- Which tasks are critical to users’ success on E2 Solutions? (Criticality)

- Which tasks are most important to E2 Solutions users? (Importance)

- Which features of E2 Solutions will users use the most? (Frequency)

- Which features are prone to usability issues? (Vulnerability)

These questions can be answered for each of the user Personas described previously.
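The four rated dimensions (criticality, importance, frequency, vulnerability) lend themselves to a simple prioritization once each task has been rated for each Persona. This is a minimal sketch under stated assumptions: the 1 to 5 rating scale, the equal weighting of the four dimensions, and the rule of scoring each task by its highest rating across Personas are all hypothetical choices, as are the task names and ratings in the example.

```python
from __future__ import annotations

from dataclasses import dataclass

DIMENSIONS = ("criticality", "importance", "frequency", "vulnerability")


@dataclass(frozen=True)
class TaskRating:
    """One Persona's ratings for one task, each on a hypothetical 1-5 scale."""
    task: str
    persona: str
    criticality: int
    importance: int
    frequency: int
    vulnerability: int

    def __post_init__(self) -> None:
        for dim in DIMENSIONS:
            value = getattr(self, dim)
            if not 1 <= value <= 5:
                raise ValueError(f"{dim} must be 1-5, got {value}")

    def priority(self) -> int:
        # Equal weighting of the four dimensions is an assumption.
        return sum(getattr(self, dim) for dim in DIMENSIONS)


def rank_tasks(ratings: list[TaskRating]) -> list[tuple[str, int]]:
    """Score each task by its highest priority across Personas, highest first."""
    best: dict[str, int] = {}
    for r in ratings:
        best[r.task] = max(best.get(r.task, 0), r.priority())
    # Sort by descending score, then by name so that ties are deterministic.
    return sorted(best.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical ratings for illustration only.
ratings = [
    TaskRating("Create authorization", "Traveler", 5, 5, 4, 3),  # 17
    TaskRating("Create authorization", "Arranger", 4, 4, 5, 3),  # 16
    TaskRating("Approve voucher", "Approver", 5, 4, 3, 2),       # 14
    TaskRating("Update profile", "Traveler", 2, 3, 2, 4),        # 11
]
print(rank_tasks(ratings))
```

Taking the maximum across Personas keeps a task near the top if it is critical for any one user type; summing across Personas would instead favor tasks shared by many user types.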

Appendix – E2 Help Desk Incident Reports

Monthly Incidents by Category, by Agency

| Category | DOC | DOL | DOT | FAA | SSA | Total |

|---|---|---|---|---|---|---|

| Administration > Administer Users | 105 | 115 | 21 | 60 | 0 | 301 |

| Administration > Financial System | 5 | 51 | 1 | 2 | 1 | 60 |

| Administration > General | 27 | 24 | 9 | 18 | 2 | 80 |

| Administration > Hierarchy and Settings | 2 | 5 | 2 | 2 | 1 | 12 |

| Administration > Manage Documents | 1 | 1 | 0 | 0 | 0 | 2 |

| Administration > Messaging | 4 | 0 | 1 | 1 | 1 | 7 |

| Administration > Routing | 1 | 25 | 0 | 3 | 0 | 29 |

| Advance > Approvals | 0 | 1 | 0 | 0 | 0 | 1 |

| Advance > Delete/Cancel Advance | 1 | 0 | 0 | 0 | 0 | 1 |

| Advance > General | 0 | 5 | 0 | 0 | 0 | 5 |

| Approvals | 1 | 6 | 0 | 0 | 0 | 7 |

| Authorization > Accounting | 1 | 36 | 1 | 2 | 0 | 40 |

| Authorization > Amendments | 5 | 15 | 0 | 1 | 0 | 21 |

| Authorization > Approvals | 24 | 21 | 0 | 3 | 0 | 48 |

| Authorization > Attachments | 0 | 14 | 0 | 3 | 0 | 17 |

| Authorization > Basic Info/Workflow | 9 | 73 | 4 | 4 | 1 | 91 |

| Authorization > Delete/Cancel Authorization | 3 | 46 | 4 | 1 | 1 | 55 |

| Authorization > Expenses | 0 | 65 | 0 | 2 | 0 | 67 |

| Authorization > Financial Interface | 8 | 6 | 0 | 3 | 0 | 17 |

| Authorization > General | 29 | 205 | 1 | 11 | 4 | 250 |

| Authorization > History | 1 | 0 | 0 | 0 | 0 | 1 |

| Authorization > NFS Expense | 0 | 2 | 0 | 0 | 0 | 2 |

| Authorization > Printables | 0 | 1 | 0 | 1 | 1 | 3 |

| Authorization > Reservations | 12 | 107 | 5 | 4 | 2 | 130 |

| Authorization > Routing | 0 | 2 | 0 | 0 | 1 | 3 |

| Authorization > Site Details | 0 | 47 | 0 | 1 | 3 | 51 |

| Authorization > Status | 5 | 4 | 1 | 0 | 0 | 10 |

| Authorization > Summary | 1 | 2 | 0 | 0 | 0 | 3 |

| Authorization > Travel Policy | 0 | 1 | 0 | 2 | 0 | 3 |

| CWTSato To Go > App Navigation | 0 | 0 | 1 | 1 | 1 | 3 |

| CWTSato To Go > Email Verification | 1 | 0 | 0 | 0 | 0 | 1 |

| CWTSato To Go > TMC Related | 1 | 0 | 0 | 0 | 0 | 1 |

| CWTSato To Go > Unable to Login | 0 | 2 | 0 | 1 | 0 | 3 |

| E2 Profile > Account Code | 0 | 4 | 0 | 0 | 0 | 4 |

| E2 Profile > Approval Routing | 0 | 8 | 0 | 1 | 0 | 9 |

| E2 Profile > Credit Card Information | 0 | 9 | 2 | 6 | 0 | 17 |

| E2 Profile > General | 105 | 94 | 39 | 112 | 3 | 353 |

| E2 Profile > Log In | 7 | 20 | 1 | 6 | 1 | 35 |

| E2 Profile > Payment Information | 0 | 12 | 1 | 2 | 0 | 15 |

| E2 Profile > Profile Sync | 4 | 3 | 2 | 5 | 0 | 14 |

| E2 Profile > Reservation Name | 0 | 5 | 0 | 3 | 0 | 8 |

| E2 Reference > Find Your Answer | 4 | 2 | 2 | 2 | 0 | 10 |

| Expenses | 0 | 4 | 0 | 0 | 0 | 4 |

| Group Authorization > General | 0 | 1 | 0 | 0 | 0 | 1 |

| GT Profile > Create Profile | 0 | 1 | 0 | 0 | 0 | 1 |

| GT Profile > General | 12 | 5 | 1 | 2 | 0 | 20 |

| GT Profile > Login Assistance/Unlock Account | 1 | 0 | 0 | 0 | 0 | 1 |

| GT Profile > Subsite Move | 0 | 0 | 0 | 4 | 0 | 4 |

| GT Profile > Travel Preferences | 0 | 7 | 2 | 10 | 0 | 19 |

| Help and Support > Find Your Answer | 1 | 2 | 2 | 5 | 2 | 12 |

| Local Travel > Accounting | 0 | 2 | 0 | 0 | 0 | 2 |

| Local Travel > Approvals | 0 | 2 | 0 | 0 | 0 | 2 |

| Local Travel > Basic Info/Workflow | 0 | 2 | 0 | 0 | 0 | 2 |

| Local Travel > Delete Claim | 0 | 2 | 0 | 0 | 0 | 2 |

| Local Travel > Expenses | 0 | 2 | 0 | 0 | 0 | 2 |

| Local Travel > General | 2 | 12 | 0 | 0 | 1 | 15 |

| Local Travel > Payments | 0 | 1 | 0 | 0 | 0 | 1 |

| Local Travel > Routing | 0 | 1 | 0 | 0 | 0 | 1 |

| No Value | 0 | 2 | 0 | 0 | 0 | 2 |

| Open and Group Authorizations | 0 | 3 | 0 | 0 | 0 | 3 |

| Open Authorization > Accounting | 0 | 1 | 0 | 0 | 0 | 1 |

| Open Authorization > Approvals | 0 | 1 | 0 | 0 | 0 | 1 |

| Open Authorization > Basic Info/Workflow | 0 | 1 | 0 | 0 | 0 | 1 |

| Open Authorization > Financial Interface | 0 | 2 | 0 | 0 | 0 | 2 |

| Open Authorization > General | 0 | 8 | 0 | 0 | 0 | 8 |

| Open Authorization > Travel Details | 0 | 1 | 0 | 0 | 0 | 1 |

| Other > Dropped Contact | 1 | 10 | 0 | 3 | 0 | 14 |

| Other > General | 14 | 14 | 2 | 6 | 2 | 38 |

| Other > Site Feedback | 28 | 17 | 12 | 36 | 6 | 99 |

| Other > System Responsiveness/Outage | 4 | 4 | 1 | 6 | 1 | 16 |

| Other > Training/User Guides/Release Notes | 1 | 2 | 0 | 0 | 0 | 3 |

| Profile > Modify Profile | 2 | 10 | 0 | 3 | 0 | 15 |

| Reports > Adhoc Reports | 4 | 3 | 0 | 1 | 0 | 8 |

| Reports > General | 1 | 0 | 0 | 0 | 0 | 1 |

| Reports > Standard Reports | 7 | 11 | 1 | 6 | 0 | 25 |

| Reservation > Booking Error | 5 | 18 | 1 | 8 | 3 | 35 |

| Reservation > Create/Cancel/Modify | 20 | 176 | 12 | 11 | 5 | 224 |

| Reservation > General | 32 | 148 | 7 | 9 | 1 | 197 |

| Reservation > Itinerary/Receipt/Fees | 6 | 67 | 0 | 4 | 1 | 78 |

| Reservation > TMC Profile ID | 6 | 2 | 2 | 0 | 1 | 11 |

| Reservations > General | 3 | 56 | 0 | 2 | 1 | 62 |

| —Troubleshoot/Research/Help | 0 | 0 | 0 | 0 | 1 | 1 |

| Troubleshooting and Errors | 0 | 2 | 0 | 1 | 0 | 3 |

| Vouchers > Accounting | 5 | 4 | 0 | 0 | 0 | 9 |

| Vouchers > Approvals | 18 | 16 | 2 | 2 | 0 | 38 |

| Vouchers > Attachments | 1 | 10 | 0 | 1 | 0 | 12 |

| Vouchers > Basic Info/Workflow | 5 | 19 | 2 | 0 | 0 | 26 |

| Vouchers > Delete Voucher | 1 | 8 | 0 | 1 | 0 | 10 |

| Vouchers > Expenses | 6 | 67 | 0 | 2 | 0 | 75 |

| Vouchers > Financial Interface | 5 | 11 | 2 | 9 | 0 | 27 |

| Vouchers > General | 101 | 104 | 19 | 28 | 1 | 253 |

| Vouchers > History | 2 | 1 | 0 | 0 | 0 | 3 |

| Vouchers > Incremental / Final | 4 | 4 | 1 | 3 | 0 | 12 |

| Vouchers > Liquidation | 0 | 1 | 0 | 0 | 0 | 1 |

| Vouchers > Liquidations | 0 | 1 | 0 | 0 | 0 | 1 |

| Vouchers > Override Pay To | 0 | 1 | 0 | 0 | 0 | 1 |

| Vouchers > Paid Amount | 0 | 2 | 0 | 1 | 0 | 3 |

| Vouchers > Payments | 0 | 13 | 10 | 9 | 0 | 32 |

| Vouchers > Printables | 0 | 3 | 0 | 0 | 0 | 3 |

| Vouchers > Remarks | 0 | 1 | 0 | 0 | 0 | 1 |

| Vouchers > Routing | 9 | 1 | 0 | 0 | 0 | 10 |

| Vouchers > Status | 10 | 2 | 2 | 4 | 1 | 19 |

| Total | 684 | 1911 | 179 | 440 | 50 | 3264 |
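Because each category label follows a "Top-level > Subcategory" pattern, the table can be rolled up into top-level areas (for example, all "Vouchers" incidents) while checking each row's total against its agency counts. The sketch below assumes the rows are available as pipe-delimited text exactly as shown above; the function names are hypothetical, and the sample rows are copied from the table.

```python
from __future__ import annotations

from collections import defaultdict

AGENCIES = ("DOC", "DOL", "DOT", "FAA", "SSA")


def parse_row(line: str) -> tuple[str, list[int]]:
    """Split one '| Category | n | ... | Total |' row and check its row total."""
    cells = [c.strip() for c in line.strip().strip("|").split("|")]
    category = cells[0]
    counts = [int(c) for c in cells[1:-1]]
    if len(counts) != len(AGENCIES):
        raise ValueError(f"expected {len(AGENCIES)} agency columns in {category!r}")
    if sum(counts) != int(cells[-1]):
        raise ValueError(f"row total mismatch for {category!r}")
    return category, counts


def roll_up(lines: list[str]) -> dict[str, list[int]]:
    """Sum per-agency counts by top-level category (the text before '>')."""
    totals: dict[str, list[int]] = defaultdict(lambda: [0] * len(AGENCIES))
    for line in lines:
        first = line.strip().strip("|").split("|")[0].strip()
        # Skip blank lines, the header, the |---| separator, and the grand total.
        if not first or first in ("Category", "Total") or set(first) <= {"-"}:
            continue
        category, counts = parse_row(line)
        top = category.split(">")[0].strip()
        for i, n in enumerate(counts):
            totals[top][i] += n
    return dict(totals)


# Sample rows copied from the table above.
rows = [
    "| Category | DOC | DOL | DOT | FAA | SSA | Total |",
    "|---|---|---|---|---|---|---|",
    "| Reports > Adhoc Reports | 4 | 3 | 0 | 1 | 0 | 8 |",
    "| Reports > Standard Reports | 7 | 11 | 1 | 6 | 0 | 25 |",
    "| Vouchers > Approvals | 18 | 16 | 2 | 2 | 0 | 38 |",
    "| Vouchers > General | 101 | 104 | 19 | 28 | 1 | 253 |",
]
print(roll_up(rows))
```

Labels that differ only slightly, such as "Reservation" and "Reservations", roll up into separate top-level groups unless they are normalized first.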

Trademark and Copyright

This document contains trade secret and confidential business or financial information exempt from disclosure under the Freedom of Information Act.